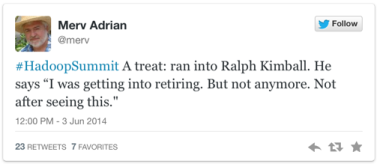

Big Data. Hadoop. Cloud Computing. The Internet of Things. What an amazing time it is in the world of data. And from a data management perspective, what a disruptive time. The traditional enterprise data warehouse and all of the tools and infrastructure that go with it are being reimagined in the era of Social, Mobile, Analytics, Cloud and the Internet of Things (SMACT). Ralph Kimball, principal author of the best-selling books “The Data Warehouse Toolkit,” “The Data Warehouse Lifecycle Toolkit,” “The Data Warehouse ETL Toolkit” and “The Kimball Group Reader” is now doing webinars with Cloudera. Check out this tweet from Gartner’s Merv Adrian, who keynoted this summer’s Hadoop Summit in San Jose:

Go Ralph!

But legacy application integration vendors are now trying to become data visualization and analytics vendors. Meanwhile, the volume, variety and velocity of the Big Data era (not to mention the shift to cloud computing) are calling into question the viability of the RDBMS-centric, rows-and-columns approach of legacy data integration vendors. With all of the buzz about what’s new and what’s coming, I’m reminded that this is also an extremely challenging time for the last generation’s technologies. That’s why 2014 is such a dangerous time for enterprise CIOs to sign on the dotted line for an enterprise license agreement (ELA) with these companies.

With so much technology disruption and change happening in the world of data, and much more to come (whether you call it “data wrangling,” “data preparation,” “data blending” or good old “data integration”), why would anyone stifle data innovation by locking into an ELA with a legacy data management software vendor? It would be like making a big bet on COBOL in 1998. It’s a recipe for what I call Innovation Prevention, and that’s not a reputation any CIO wants in 2014.

The value proposition of an ELA boils down to whether you weigh the discount you believe you’re getting in terms of “sunk cost” or “sunk value.”

Like investing in COBOL in 1998

When the technology bubble burst in 2001, the conversation moved almost overnight from innovation toward standardization and consolidation. Unlike many other technology markets, data management and analytics vendors weathered the storm fairly well, because operational excellence and measurement became essential ingredients of survival. We saw business intelligence (BI) vendors pivot to corporate performance management as Sarbanes-Oxley and other regulations became the focus and centralized IT organizations were tasked with driving cost out of the business. The BI vendors themselves soon became acquisition targets: access to timely, relevant and actionable information rose to the top of the CIO priority list, and the idea of “one throat to choke” was a compelling value proposition.

These were the glory days of ELAs for data management vendors. I remember a Business Objects customer who compared line-of-business data marts to the popular carnival game “Whack-a-Mole” and asked for more standardization, not more innovation. Just as Software as a Service (SaaS) was about to introduce a completely new set of data silos, many enterprise IT groups had become the “Department of No.” For data management vendors, the choice was either to get acquired or to make acquisitions and grow the list of on-premises products they could sell to their installed base.

Look at research and development (R&D) spend as a percentage of revenue for the legacy data management vendors that have avoided being acquired, and you’ll quickly see that innovation has not been a primary growth driver.

Enterprise IT “cloudification” and dealing with data gravity

As the technology market goes through what Gartner calls “the secular megatrends,” IT organizations are now focused on two primary initiatives: “cloudification” and dealing with “data gravity.” Which of our business applications and platforms will move to the cloud next? And as more and more of our corporate data and infrastructure runs in the cloud, where will our data, big and small, reside? At the same time, the business is asking IT for more self-service, and IT is looking for new ways to drive latency out of the business.

Meanwhile, a new breed of technology vendors, built specifically to handle SMACT requirements, has emerged. Visionaries like Marc Benioff, Aaron Levie and Mark Zuckerberg learned the lessons of Clayton Christensen early in their careers and understand that they must build cultures of continuous innovation to remain relevant and survive.

The world of data management is no longer a structured world. It’s no longer a world of rows and columns. The organizing principles of data warehousing are now being rewritten as we prepare for the impact of Hadoop, NoSQL databases and data warehousing in the cloud. So from a data management technology perspective in 2014, whether it’s Big Data, data integration, data quality, master data management (MDM) or data visualization, the last thing you want to do is end up with one throat to choke that is already dead.

Just as Hadoop and cloud computing are disrupting traditional approaches to data warehousing, Web standards like REST and JSON are replacing XML and SOAP. And when it comes to traditional business applications, as Geoffrey Moore and others have pointed out, thanks to the SaaS model and the rise of gamification, collaboration, mobile and other business and social trends, your critical data increasingly resides in more dynamic systems of engagement (Salesforce, Workday, Zuora, etc.), not just finance-focused systems of record. Similarly, in the data world we’re seeing:

- New tools emerging with a more consumerized user experience delivering powerful data visualization, data discovery and predictive analytics

- Information consumers who expect real-time streaming and are no longer satisfied with a 24-hour refresh passing for real time

- Hadoop, NoSQL databases and other new technologies being deployed in the enterprise that are better suited for managing the onslaught of machine-generated, unstructured data that was never meant to be stored in a structured enterprise data warehouse

- Rigid on-premises master data concepts and technologies that were built for supreme IT governance and control being disrupted by more agile approaches

- Legacy ETL tools struggling to find relevance for integrating SMACT data sources

- Heavyweight ESB and middleware tools struggling to connect cloud-based data sources, not to mention meet the expectations of a new breed of “citizen integrators”

What to do next?

My recommendation to CIOs in 2014 is to wait and watch. Develop a best-of-breed mindset and, when it comes to your data, focus on the innovation possibilities, not just your traditional integration and data management options. A lot of great new companies are establishing themselves as enterprise-grade, but be sure to dig into the technology, because “cloud washing” continues to plague our industry.

New data management technologies are hot and getting hotter in Silicon Valley. Why not connect with the top venture capital (VC) firms and have them curate their portfolios based on your needs?

And when your incumbent data management vendors push for an ELA, watch out for mercenary sales tactics. Focus on sunk value versus sunk cost, and dig into their R&D spend as a percentage of revenue, particularly on their core technologies, which are often integrated with their acquired technologies only at the PowerPoint level.

Don’t let a 2014 data management ELA, and the long-term discount that comes with it, lead to lasting Innovation Prevention and a data integration solution that won’t be viable in the future.

Darren Cunningham is vice president of marketing at SnapLogic.