Gaurav Dhillon is the Chairman and CEO of SnapLogic, overseeing the company’s strategy, operations, financing, and partnerships. Having previously founded and taken Informatica through IPO, Dhillon is an experienced builder of technology companies with a compelling vision and value proposition that promises simpler, faster, and more cost-effective ways to integrate data and applications for improved decision-making and better business outcomes.

M.R. Rangaswami: As we’re heading into the new year, how can leaders begin to make room in budgets to take advantage of AI?

Gaurav Dhillon: As generative AI continues to be the topic of conversation in every boardroom, the question board members are asking leaders is not whether they can afford to invest in generative AI but what they will lose if they don’t. As AI-driven technologies continue to expand in reach, there is a new baseline for business operations, which includes evolving customer expectations. Any sophisticated task with the potential to be automated will be automated.

Companies must adapt to maintain a competitive edge, and until a company strategically harnesses AI, it will struggle to meet the industry’s new productivity standards. As organizations begin to prepare for AI implementation, it’s important for them to prioritize reducing their legacy debt—or what is commonly known as technical debt.

The challenge with legacy tech stacks is that they are built around older and outdated languages and libraries, which inhibit an organization’s ability to successfully integrate new applications and systems, including GenAI tools. Modernizing infrastructure is key to ensuring enterprise data is ready for widespread AI adoption and use across the business. AI adoption is increasingly becoming integral to a company’s relevance, efficiency and effectiveness.

M.R.: What do you believe are the biggest inhibitors to AI adoption in the workplace?

Gaurav: The biggest inhibitors of AI adoption in the enterprise are rooted in the fact that people look at consumer AI tools like ChatGPT and make comparisons to their own products. AI is fueled by data, and enterprise AI needs to have guardrails on what type of data it can access. Today, we are still at the hunter-gatherer stage with business data.

Another inhibitor to AI adoption for organizations is security. Ideally, businesses want to leverage AI tools to ask questions about customers, but in order to get to this stage, organizations first need guardrails to ensure that the data is handled and accessed securely. The stakes for consumer AI are low because if you ask ChatGPT to write you a recipe for dinner and it turns out bad, you lose a meal. The bar for enterprise AI is much higher; if a customer looks to your business for answers and solutions, people’s jobs can be at stake.

M.R.: As a two-time founder, what key lessons have you learned that you believe every leader should be aware of, especially in the midst of today’s AI revolution?

Gaurav: The hardest lesson I’ve had to come to terms with is that product-market fit is a scientific art. A company can do and build amazing things at scale, but that alone won’t determine or define its success. Closely engaging with and listening to early adopters and customers is the only way successful business leaders can discern and establish what the ideal product-market fit is. As a founder and entrepreneur, it’s critical to be a part of this exploration from the very start. While motions like scale can be delegated, product-market fit cannot.

Patrick Thean isn’t a boxer, but he loves to quote Mike Tyson in saying, “Everyone has a strategy until I punch them in the mouth.” Through his years as a CEO, serial entrepreneur, and coach to other company leaders, he has become an expert not only in crafting visionary strategy, but in executing with mastery.

Patrick is a USA Today and Wall Street Journal bestselling author. With his book Rhythm: How to Achieve Breakthrough Execution and Accelerate Growth, he shares a simple system for encouraging teams to execute better and faster. He reveals early signs of common setbacks in entrepreneurship and how to make the necessary adjustments not only to stay on track, but also to accelerate growth.

His work has been seen on NBC, CBS, and Fox. Patrick was named Ernst & Young Entrepreneur of the Year in 1996 for North Carolina as he grew his first company, Metasys, to #151 on the Inc 500 (now called the Inc. 5000).

Currently the CEO and Co-Founder of Rhythm Systems, Patrick Thean is focused on helping CEOs and their teams experience breakthroughs to achieve their dreams and goals.

M.R. Rangaswami: Crafting a compelling vision is often cited as a critical aspect of strategic leadership. How do you recommend leaders go about developing a clear and inspiring vision for their organizations, and what are the key components that should be included in a well-defined vision statement?

Patrick Thean: If you want to create a compelling vision, you first need to change how you approach strategic thinking. Strategic thinking should not be something you do randomly or squeeze into action-focused meetings. You need to get into a Think Rhythm. Start having regular Think sessions where you and your team reflect on your past achievements and challenges and imagine an inspiring future together.

During your Think sessions, you really have to step back from daily operational work and focus on the future of your business. Make it clear to your team that this time is for thinking only – not for finalizing goals or jumping into action. Play around and have fun brainstorming! Don’t shoot any ideas down.

When it comes to crafting a vision, use your Think sessions to dream big. Let your imagination run wild as you imagine what your company could look like five, ten, or even twenty years from now. Experiment with exercises like the Destination Postcard (which asks you to envision your company one year from now, but can be adapted to longer amounts of time). Be specific and include elements like the impact you want your company to make and the growth you want to achieve.

Once you and your team have talked through these ideas and have gotten excited about a shared vision, craft a vision statement that will inspire the rest of your employees to step boldly into the future with you. Avoid corporate buzzwords and “fluff” (marketing language). The vision should be easy to read, and it should connect with people’s hearts. You want the rest of your company to feel just as excited about the future as you are!

M.R.: Once a vision is crafted, what strategies do you recommend for fostering alignment across different teams and departments to achieve this vision?

Patrick: Alignment starts at the very top. The CEO and leadership team need to clearly and repeatedly communicate the company’s vision to all other employees. And as you’re doing this, you can enter your second Rhythm – the Plan Rhythm.

During the Plan Rhythm, you need to come together with your leadership team every quarter and every year to discuss, debate, and agree on priorities that move the company in the right direction. Each person on the team should know what they are responsible for accomplishing. Break each priority down into key tasks or milestones to avoid falling into the strategy execution gap.

Then you will cascade the company’s plan down to the departments. They will follow the same planning process to agree upon their own priorities, which align with and support the company’s goals. Teams need to talk cross-departmentally, too, to ensure alignment is horizontal as well as vertical. They need to plan for smooth project hand-offs to avoid waste, rework, and worst of all: disappointed customers.

Alignment isn’t just important when it comes to executing a plan with your team. Cultural alignment is important, too. Everyone on your team needs to be aligned with your company’s core values and have the right mindsets. This will ensure that they are behaving in ways that create the kind of work culture you’re trying to foster. If they’re seriously misaligned, you might see behaviors that create tension among the team or spin a priority off its track.

Even when you have a team of A-Players who are aligned on your core values and aligned on a plan, you need to keep realigning week after week by getting into a Do Rhythm. Hold Weekly Adjustment Meetings to discuss the progress of your top priorities. This practice will give your team thirteen opportunities each quarter to take action and reorient when your goals are veering off track.

M.R.: What advice do you offer to leaders striving to cultivate a high-performance mindset within their teams, particularly during times of change or uncertainty?

Patrick: If you are leaving performance conversations to once or twice a year, you are actually decreasing employee engagement. Nobody wants to wait six or twelve months to hear what they’ve been doing well and what they need to work on. A disengaged employee doesn’t perform well and is more likely to leave, which costs valuable organizational knowledge, time recruiting and training a new hire, and of course – money.

You need to take a proactive approach to performance instead. Make sure every person on your team, from the C-suite to the frontline employee, understands their role and responsibilities. I recommend using Job Scorecards to make this clear and easy to understand for employees and managers. When people know what is expected of them and what goals they should be working towards, they’re more engaged and they do better work. They also don’t waste time working on the wrong things that won’t really benefit the company. When performance reviews roll around, they will already understand what they’re going to be rated on, because they’ve been working on it the whole time in accordance with their Job Scorecard. This takes much of the fear of the unknown out of the process.

Week to week or month to month, managers should be checking in with their employees by holding 1:1s. A regular 1:1 cadence encourages transparency and accountability. It’s a candid conversation that prompts ongoing feedback in both directions. Managers should also use these meetings to provide coaching and help employees grow their skills and careers.

This is especially important during times of uncertainty, when employees may start to question their job security. If the line of communication is open between manager and employee, you help reduce your employees’ fear of being blindsided by bad news. And when managers are focused on growing and developing their people, employees will feel cared for and engaged. They will do their jobs much better than they would if they were kept in the dark about their own performance.

M.R. Rangaswami is the Co-Founder of Sandhill.com

James Webb says the difference between success and failure often comes down to whether the person thinks big in the early stage of the business.

Author of Redneck Resilience: A Country Boy’s Journey To Prosperity, James is an investor, philanthropist and successful multi-business owner. He began his entrepreneurial journey in the health industry as the owner of several companies focused on outpatient medical imaging, pain management and laboratory services.

Following successful exits from those companies, James shifted his focus to the franchise world and developed, owned and oversaw the management of 33 Orangetheory Fitness® gyms, which he sold in 2019. Not one to stop, he currently has two additional franchise companies in various stages of growth.

His experience as a lifelong entrepreneur offers valuable insights for those looking to build businesses they own and connect them with their big-picture plan.

M.R. Rangaswami: What are the top two most common missteps a young entrepreneur makes in their first two years of business?

James Harold Webb: There are many mistakes an entrepreneur can make during the start-up stage of their business. Taking money “off the table” too quickly can lead to an assortment of problems, including cash shortages and delays in building your infrastructure and expanding. Other than my “salary” (if needed), I tend to leave all the money in the business for several years. The only exception is determining any income tax consequences and taking what I call a “tax distribution,” solely for the purpose of paying the prior year’s income taxes or quarterly income tax payments.

I see too many 8-to-5ers who are not putting in the time or effort it takes to get a business off the ground and profitable. When you are ready to stop for the day, make one more phone call or send out one more email. Solve one more problem. Unbox one more package. Whatever it takes, just work harder than anyone else.

M.R.: How important is a leadership team in the early stages of building a business? What (if any!) budget should people allocate to that leadership team?

James: Leadership is one of the key elements of a successful business. Creating a corporate culture from the beginning is crucial. Establishing relationships is also extremely high on the leadership list, whether it be with fellow corporate staff, employees, vendors, banking, or even competitors. Listen to people. Invest in people. Take the time to recognize people and to hold yourself accountable to them. Relationships will define your success.

M.R.: How can someone who is just starting their business beat the odds and not fail in the first five years?

James: Work harder than anyone else.

Hope for the upside, but always plan for the downside. Stay focused on your upside and driving your business to success, but have a contingency plan for the “what ifs.”

Build a solid infrastructure before you reap the benefits of your venture. Find the right people who are dedicated to helping you reach your dream of success.

With employees, be clear in your expectations, hold them accountable, and be available to assist and direct as needed. Contrary to popular belief, you can be a boss and a “friend.” If you’ve done all of that and they still can’t get it done, then it’s time to let them go.

M.R. Rangaswami is the Co-Founder of Sandhill.com

2023 was undoubtedly the year that AI barnstormed our tech consciousness. Trained on massive amounts of public data, AI generated cool new images, wrote up content summaries, and delivered seemingly original work in the blink of an eye. Could this also be the future of helping companies balance the need for sustainable, green innovation against resource and supply chain constraints?

Artificial intelligence offers promise for accelerating materials/formulation R&D. But AI for science needs to be uniquely focused, applying small, curated use-case AI models mapping to multiple scientific principles at a time, to speed scientific discovery. This has the potential to be a game-changer across a wide range of fields, including medicine, agriculture, engineering and more, which is why Sunil believes that 2024 will be the year for specialized AI.

Sunil Sanghavi is currently CEO of NobleAI, a pioneer in Science-based AI solutions for chemical and material informatics. He has a rich and diverse operating background in deep-tech companies over 40 years. Most recently, he was Senior Investment Director at Intel Capital, where he invested in AI/ML hardware and software companies including Motivo, Untether AI, Syntiant, and Kyndi. He attended the MSc Chemistry program at the Indian Institute of Technology Bombay and obtained a BSEE from Cal Poly, San Luis Obispo.

M.R. Rangaswami: You have an impressive resume leading a variety of companies. What led you to NobleAI at this time?

Sunil Sanghavi: Generative AI dominated the discussion in 2023, and will certainly continue to be a fascinating area to watch. At this point most people have experimented with the many available LLM-based tools and understand how they can help us with everyday tasks. But what I find most exciting is the opportunity to apply AI to speed scientific discovery. Science-based AI (SBAI) has the potential to be a game-changer across a wide range of fields, including chemistry, materials, energy and many others.

That area is very exciting to me and is what drew me to NobleAI, where we’re showing the power of Science-Based AI (SBAI) to help companies achieve their goals. As opposed to large language models (LLMs), which is what GenAI is (basically scraping massive amounts of publicly available data), SBAI uses SSMs or Smaller Science-infused models where we apply the power of AI to private, industry- or company-specific data sets, and add to that applicable scientific laws and any available simulation data. This elegant process presents incredible opportunities for advancements to develop or improve chemicals, materials and formulations while also tackling pressing issues for companies like cost, supply chain and customer satisfaction. And unlike LLM-based solutions, SBAI is an optimized ensemble of models, optimized for each specific use case. Our ability to do this for literally hundreds of use cases in 3 or so person-months each and at a deterministic cost is what allows us to offer customized solutions while being able to scale NobleAI’s business.

M.R.: What are the challenges to innovation using SBAI?

Sunil: As is the case with any technological advance, the change in mindset will be the most immediate challenge. Scientists and researchers are trained to advance or eliminate solutions based on empirical experimentation. This can be cost-prohibitive, and is always by its nature time-consuming and limited in scope. In fact, research into chemical and specialized materials, an industry that spends $100 billion per year on R&D, has not experienced much innovation in the past 50 years for this very reason. Developing chemicals and materials is incredibly complex, often requiring experimentation across a multitude of parameters so that researchers can understand how hundreds of different ingredients interact at scales ranging from molecular to formulations. But now, AI for science is opening the door to a better approach and NobleAI is leading the charge. The goal is to use AI to more rapidly explore a greater range of chemicals and materials in software (i.e., before going to the lab), saving potentially months or even years of R&D time.

M.R.: Where do you see this really taking off first? What are the emerging trends that are most exciting?

Sunil: To me the most exciting possibilities are in the area of sustainability. There’s a big push to improve the safety of material ingredients for both the environment and human health. For instance, more people, organizations and regulators are now talking about the need to replace forever chemicals. But anytime there’s a need to replace an ingredient it can be a real challenge for companies to find substitutes. That’s why you often see the knee-jerk reaction to fight a new environmental regulation. But the great thing about Science-Based AI is that we can turn that around. SBAI can not only help companies stay ahead of the shifting regulatory environment but also support them in getting behind sustainability initiatives. I call what we do “Good AI, For Good”.

M.R. Rangaswami is the Co-Founder of Sandhill.com

Software Equity Group’s annual report is in, revealing that SaaS is here to stay.

As the report details, for many in the technology industry, the story of 2023 was all about artificial intelligence, its rapidly advancing commercial applications, and the speed and extent with which it will impact the world we live in, both from a business and personal perspective.

4 SaaS components of 2023 that will impact what we see in 2024.

1. The advancement of generative AI and its impact on software and SaaS companies, both as users and creators of AI, was a top story in 2023 and one that will be front and center in 2024 as well.

However, quietly and perhaps a bit behind the scenes, another storyline proved to be just as important in 2023: the resilience of the U.S. economy and subsequent cementing of software and SaaS’s place as a key pillar driving digital transformation globally.

2. Inflation decreased by nearly half (with the CPI dropping from 6.5% in December 2022 to 3.4% in December 2023), interest rates stabilized, and the labor market remained strong (unemployment rate at 3.7% with 216k jobs added in December).

3. Software and SaaS companies pivoted towards operational efficiency, and fortunately for the U.S. economy, many of these companies were successful in this endeavor. The result was a fantastic year for the SEG SaaS Index™, with the Index increasing 34% YOY, outpacing the S&P 500 and Dow Jones, and trailing only the Nasdaq (43% increase) among major indices.

On the M&A side, there were over 2,000 SaaS transactions, making 2023 the second strongest year on record for SaaS M&A, only narrowly trailing 2022.

4. While AI garnered a lot of the hype in 2023, an equally important story is the strength and resilience of the software ecosystem. 2023 was another proof point that SaaS is “here to stay.”

4 Macroeconomic Outlooks for 2024: Inflation, interest rates, employment, growth and politics

1. Inflation continues to decrease, finishing 2023 at 3.4% YOY compared to December 2022. The underlying core CPI, which strips out volatile food and energy prices, measured 3.9% in December 2023, its lowest YOY change since May 2021. Though additional cooling is still needed for inflation to reach the 2% annual target the Federal Reserve sets, the progress made in 2023 is encouraging.

2. The prospect of the Federal Reserve cutting interest rates is coming into focus. It will closely watch inflation and the unemployment rate (which remains solid at 3.7%) as it plots the course through this year.

The timing of potential cuts will greatly impact publicly traded SaaS stocks and the M&A markets, as the potential for a lower-cost borrowing environment would be a welcome sight to these markets.

3. What about a recession? 2023 growth is now expected to come in between 2 and 3%.

GDP growth is expected to decline slightly in 2024 but remains positive at around 2%. This scenario avoids a recession altogether and supports a healthy economic environment.

A scenario in which the U.S. beats GDP estimates again provides an upside case for publicly traded SaaS stocks in 2024. This possibility is further bolstered by the recently released Q4 GDP data, in which the U.S. GDP grew 3.3%, beating consensus estimates.

4. The economy will be a primary focus on the 2024 campaign trail. However, the reality remains that the Federal Reserve dictates monetary policy independent of political election cycles.

Election risk is still present due to the divisive nature of the current U.S. political environment, albeit much less discussed than during the last cycle.

Globally, geopolitical risks include regional conflicts in the Middle East and their impact on oil prices, the ongoing Russia-Ukraine war, and tensions between China and Taiwan.

For full details, see Software Equity Group’s 2024 SaaS Report.

The turbulent markets of 2022-2023 and volatility in the M&A environment have brought the topic of valuation to the forefront in many of our discussions with founders and investors.

Regardless of market ups and downs, the factors that are most impactful to valuation remain relatively constant, with some standards changing with market cycles as witnessed over the past decade. Safe to say, valuation continues to be both art and science.

Allied Advisers put together this article as a refresher on some of the most important valuation factors in the current market for technology companies; we hope our report also serves as broad guidance to founders, executives and investors in achieving an optimal valuation outcome for their business.

It is often said that valuing a business is more an art than a science. Another assertion is that valuation is in the eye of the beholder, akin to beauty. There is truth in both these statements since enterprise valuation is impacted by several variables, not all of which can be quantified, and perception of future prospects of a business can be quite different depending on the biases of the evaluator.

Regardless of this sense of mystery and fuzziness about valuation, there are several fundamental factors that influence the value of a technology business.

In this article, we cover five important elements that have a distinct bearing on the valuation of technology companies, noting that many of these factors apply to businesses in other sectors as well.

1) Scarcity in a Large Market

A business that is the only player, or one of just a few players, in a large end market is likely going to be seen as being valuable since there are limited substitutes for the scarce solution offered by that company. It is simple supply-demand dynamics – when there is clear demand for a product in short supply, the price of that product goes up.

2) Significant Differentiation from Competitors

Often referred to as “USP” or unique selling proposition, differentiation of a technology business is important to valuation since it creates scarcity and sets the business apart from its competition. Differentiation may come from unique product features, ability to address challenging use cases, performance metrics, superior UI design, ease of deployment and use, economic value to the customer (time to value, ROI), etc.

3) Growth vs. Profit Margin and Rule of 40; Capital Efficient Growth

In the frothy market prior to COVID that eventually peaked in 2021, hypergrowth was the mantra for technology companies. Businesses that grew at breakneck pace with no heed to bottom line profitability attracted nosebleed valuations in private funding rounds. A popular performance measure of software companies called Rule of 40 (revenue growth rate + profit margin > 40%) was highly biased towards revenue growth; companies that grew at 100% with a -50% operating margin (R40 metric = 50%) were highly valued due to their growth and, despite poor profit margins, easily attracted capital.
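To make the Rule of 40 arithmetic concrete, here is a minimal Python sketch. The function names and the threshold parameter are illustrative assumptions rather than anything defined in the Allied Advisers report; the only figures taken from the text are the 100% growth and -50% operating margin example and the 40% cutoff.

```python
def rule_of_40_score(revenue_growth_pct: float, profit_margin_pct: float) -> float:
    """Return the Rule of 40 score: revenue growth rate plus profit margin,
    both expressed in percentage points."""
    return revenue_growth_pct + profit_margin_pct


def passes_rule_of_40(revenue_growth_pct: float,
                      profit_margin_pct: float,
                      threshold_pct: float = 40.0) -> bool:
    """Return True when growth plus margin exceeds the threshold (40 by default)."""
    return rule_of_40_score(revenue_growth_pct, profit_margin_pct) > threshold_pct


# Example from the text: 100% growth with a -50% operating margin.
score = rule_of_40_score(100.0, -50.0)
print(score)                              # 50.0
print(passes_rule_of_40(100.0, -50.0))    # True, despite the negative margin
```

Because the score is a simple sum, a large enough growth rate can offset a deeply negative margin, which is the bias towards revenue growth that the paragraph above describes.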

4) Revenue Model and Gross and Net Revenue Retention Metrics

Business models typical to technology product/platform companies are subscription, licensing or transactional. Subscription models provide recurring revenue (monthly or annually), licensing is usually a one-time fee, and the transactional model provides revenue per transaction.

5) Customer Profile and Concentration

Companies that have large enterprises as customers are more likely to be able to expand revenues from such clients given the numerous groups within large organizations and bigger budgets for vendors. In contrast, having small/medium (SMB) customers limits the opportunities for large contracts and wallet share expansion given limited budgets. For these reasons, companies with an enterprise customer base have traditionally been viewed more favorably by investors compared to businesses serving SMB clients.

The full report is available from Allied Advisers.

Ravi Bhagavan is a Managing Director at Allied Advisers

Ofer Klein is a decades-long Israeli Defense Force helicopter pilot and avid kitesurfing enthusiast who likens the adrenaline rush to being a founding CEO of a thriving security startup. It’s this unique background and experience that have been key to Ofer’s leadership style and Reco’s success.

Ofer and his fellow co-founders developed the platform and AI algorithm to use for counterintelligence for the Israeli government, and decided to productize the platform in 2020, which led to the birth of Reco.ai. Now, Reco.ai is a leading organization focused on safeguarding organizations with its modern, AI-driven SaaS security offering.

M.R. Rangaswami: What security concerns are not being talked about enough today?

Ofer Klein: There are a few. Security Keys Are Replacing Multi-Factor Authentication (MFA) – MFA is a common method of adding a second layer of security onto SaaS applications (in addition to a password). But, MFA is not the only security boundary, as SaaS applications are beginning to use security keys for secondary verification. Security keys are physical devices that use a unique PIN only available on that device to authenticate.

Another is Microsoft 365 and Okta Cyber Attacks. Maintaining the security of core SaaS applications, such as Microsoft 365 and Okta, is a concern because they face more cyber threats: they are foundational to making SaaS programs run, and one could potentially become the next SolarWinds. Despite growing security threats, these technologies have experienced an uptick in adoption. The security built into Microsoft 365 E5 and Okta isn’t enough, however, to keep the application and organizational data stored in it secure, prompting organizations to look for dedicated SaaS security solutions.

M.R.: Why is securing SaaS applications so important?

Ofer: During the pandemic, cloud collaboration tools fundamentally changed the way modern organizations work. Enterprises today are adding new applications to their technology stack at an unprecedented rate, using an average of 371 SaaS applications. This dramatic increase has resulted in an elevated demand for a security solution that provides full visibility into everything connected to a company’s SaaS environment, and at the same time, ensures it complies with regulations.

Attempting to secure new SaaS tools with techniques that were developed for legacy on-premise systems restricts collaboration and misses a broad range of security events. Only by understanding the complete business context of an interaction can security analysts identify and interpret potential threats, and also determine the best and most efficient way to respond.

M.R.: What role does AI play in solving SaaS security?

Ofer: As in many sectors today, AI is revolutionizing the security industry. The use of AI to identify and address security vulnerabilities is growing rapidly and is very effective. This is especially true for companies adding new generative AI applications into their technology ecosystems, as this can expose an organization to added risk due to the sharing of emails, recorded calls, and other data. Incorporating AI models, techniques, and processes like Large Language Models (LLMs), Knowledge Representation Learning, and Natural Language Processing (NLP) gives companies greater visibility and allows them to discover potentially risky events (such as the improper use of AI tools) and be alerted to data exposure, misconfigurations, and mispermissions around a user.

The incredibly fast adoption of generative AI tools has led to new data risks, such as privacy violations, fake AI tools, phishing and more. As a result, organizations need to establish AI safety standards to keep their customer and employee data safe. Having a SaaS security solution that can identify connected generative AI tools is critical.

AI is foundational to our SaaS security offering and enables enhanced functionality and effectiveness. Our proprietary and patented AI algorithm powers our Identities Interaction Graph, which correlates every interaction between people, applications, and data, and then assesses potential risk from misconfigurations, over-permissioned users, compromised accounts, risky user behavior, and also the use of generative AI applications.

One-third of organizations regularly use generative AI applications in at least one function, making it critical for SaaS security platforms to have the ability to discover anomalous behavior for both humans and machines and gain even deeper proactive threat mitigation.

M.R. Rangaswami is the Co-Founder of Sandhill.com

Sanjay Sathé, Founder & CEO of SucceedSmart, is no stranger to disrupting established industries. Previously, Sathé spearheaded RiseSmart’s evolution from a concept based on his personal experiences into a major disruptor in the $3B outplacement industry, becoming the fastest-growing outplacement firm in the world. In September 2015, RiseSmart was acquired for $100M by Randstad.

Launching SucceedSmart, a modern executive recruitment platform with a unique blend of proprietary, patent-pending AI and human expertise, was a culmination of Sathé’s 15 years as a candidate of executive search and 15 years as a buyer of executive search. It was clear that the industry was living in the past and ripe for disruption.

While many organizations across the broader HR market were embracing technology, the executive search industry continued to operate almost entirely offline and saw a lack of innovation and technology adoption over the past 50 years.

Sathé invested time in researching both executives and corporate HR leaders to confirm his thinking, and when he received a resounding “yes” to the hypothesis, he dove in to launch SucceedSmart in 2020. SucceedSmart is now on a mission to modernize leadership recruiting for director to C-level talent and fill complex leadership roles with unmatched agility, accuracy, and affordability, while promoting diversity and transparency.

M.R. Rangaswami: How can artificial intelligence (AI) positively impact HR leaders and teams?

Sanjay Sathé: Businesses across industries have increasingly adopted AI in recent years. It’s no longer a question of whether to embrace AI technology—but when and how.

Contrary to the misconception that AI will eliminate jobs, AI can empower CHROs, talent partners, talent acquisition teams, hiring teams and other employees to work more strategically, and improve diversity and inclusivity. By automating routine tasks, AI also frees up time for HR professionals to focus on the “human” side of human resources and build relationships with candidates and employees.

From an HR perspective, AI automates tasks such as talent sourcing, resume screening, and interview scheduling, and helps centralize all candidate information in a streamlined platform. AI technology also unlocks insights about the hiring process and candidate experience to drive improvements over time. Leveraging AI also minimizes conscious and unconscious biases in the hiring process by matching candidates with jobs that align with their accomplishments, skills, and experience.

M.R.: What are some of the top challenges in executive recruiting today and how can businesses overcome them?

Sanjay: Leadership has an immeasurable impact on business success and executives are among the most critical employees at any organization. Yet, despite increased turnover, business velocity, and competition, executive search has remained devoid of innovation and technological advancements for half a century.

The traditional executive search process can take several months—leading to a poor candidate experience, as well as lost productivity and revenue as roles go unfilled. The approach is transactional, exclusionary, clubby, time-consuming, and expensive. Not only is the pricing exorbitant, but in retained search, corporations may have to pay all those fees and still not get a candidate. And the same executives are often passed around between firms, leading to a limited talent pool.

Embracing modern executive recruitment technology can help address these challenges, decreasing total time to hire and overall hiring costs, and enabling organizations to build more diverse leadership teams. It can also support diversity initiatives by focusing specifically on accomplishments and removing demographic and other personally identifiable information that may lead to unconscious bias during the hiring process.

M.R.: How can businesses effectively build their leadership pipelines given the Silver Tsunami, meaning the wave of Baby Boomer employees retiring in the coming years?

Sanjay: More than 11,000 Baby Boomers reach retirement age each day and more than 4.1 million Americans are expected to retire each year through 2027.

Traditional executive search primarily focuses on serving organizations—not executives. Firms often wait for executives to reach out to them and the same executives are often passed around between companies, resulting in a limited talent pool. As an increasing number of executives retire as part of the Silver Tsunami, traditional candidate networks are becoming even smaller.

To improve talent sourcing across all roles amid the Silver Tsunami, organizations can turn to AI-powered candidate recruitment technology—rather than relying on personal connections. This approach enables organizations to be more proactive about succession planning by identifying and nurturing internal talent while simultaneously scouting for external candidates.

A modern executive recruitment platform can support the growing and urgent need to fill executive roles as more workers retire, by enabling corporations to build diverse pipelines of qualified executives and reduce total hiring time to a matter of weeks, compared to four to six months with traditional executive search firms.

M.R. Rangaswami is the Co-Founder of Sandhill.com

What does 2024 hold for industrial tech? PitchBook’s latest Emerging Technology Research looks ahead to what could be in store for verticals like agtech, clean energy, and more.

Here is a summary of PitchBook’s Outlook on Agtech, the Internet of Things, Supply Chain Tech, Carbon & Emissions Tech, and Clean Energy.

AGTECH: Autonomous farm robots will see a major increase in adoption.

The anticipated surge in adoption of autonomous farm robotics in 2024 is driven by a convergence of compelling factors addressing critical challenges within the agriculture sector.

First, the persistent global labor shortages in agriculture are pushing farmers to seek alternative solutions, with farm automation offering a viable response to mitigate the impact of diminishing workforce availability.

Second, technological advancements, particularly in artificial intelligence, sensors, and automation, have matured to a point where the cost-effectiveness and reliability of robotic systems make them increasingly attractive for widespread adoption.

Third, the imperative to optimize resource use, reduce operational costs, and enhance overall farm efficiency aligns seamlessly with the capabilities of modern farm robotics, positioning them as essential tools for a more sustainable and productive agricultural future.

Fourth, the rise of Robotics-as-a-Service models is proving instrumental in easing upfront costs associated with adopting these technologies.

Fifth, pilot studies have successfully demonstrated the effectiveness of farm robotics, and companies are now transitioning to full-scale commercialization, making 2024 a pivotal year for the integration of these technologies into mainstream agricultural operations.

INTERNET OF THINGS: Private 5G startups will produce a unicorn valuation in a late-stage deal or acquisition.

Unicorn valuations have been rare in the Internet of Things (IoT) industry with only two VC deals for Dragos and EquipmentShare valuing companies over $1.0 billion in North America and Europe in 2023. 5G startups have not reached this threshold despite achieving rapid valuation growth for midstage companies and a $1 billion exit in the space in 2020 for Cradlepoint. Numerous technical and commercial barriers to entry will ease over the coming year and revenue growth is on pace to accelerate.

The fundraising timelines of private leaders align with this trend, creating investment opportunities for growth-stage and corporate VC investors, along with telecommunications acquirers.

SUPPLY CHAIN TECH: Drone deliveries will go commercial in the US with more funding and investor interest in the space.

The Federal Aviation Administration (FAA) regulates the drone delivery market with safety as its primary consideration. To date, drones have been subject to a restriction on beyond visual line of sight (BVLOS) operations, meaning an operator must keep the drone within sight at all times when it is flying.

This restriction represents a significant (some might say insurmountable) hurdle for the development of a drone delivery marketplace. The cost of an operator visually tracking and monitoring every delivery via drone is prohibitive.

The FAA has stated that it wants to integrate drones into common airspace, and issued a number of exemptions to the BVLOS rule to startups and larger companies over the course of 2023.

These exemptions open the door for the market to finally develop.

CARBON & EMISSIONS TECH: Demand for carbon credits will recover, following uncertainty in 2022 and 2023.

Voluntary carbon markets (VCMs) have been under significant scrutiny in recent years, particularly carbon credits based on avoidance—rather than removal—of emissions.

Multiple sets of standards, and the perceived risk associated with low-integrity credits, have been reducing the overall traded volumes of carbon credits and pushing buyers toward removal-based credits, whose integrity is easier to prove.

New independent standards are emerging, and while there are no obligations for credit providers to follow them, they provide the means to show high integrity and reassure buyers.

CLEAN ENERGY: US clean hydrogen technology companies will become acquisition targets.

Low-carbon hydrogen is seen as a key component of global decarbonization efforts, particularly for certain industrial applications and heavy transportation. Earlier this year, the US Department of Energy allocated $7 billion to a program to develop seven hydrogen hubs across the US, to produce, store, and distribute hydrogen.

The companies involved in these hubs are varied. They include energy and oil & gas companies that have experience with large-scale energy projects but will likely look to close technology gaps through acquisitions.

PitchBook is a Morningstar company providing the most comprehensive, most accurate, and hard-to-find data for professionals doing business in the private markets.

Jason Lu, the founder of CECOCECO, began his journey in the LED display industry in 2006 by creating ROE Visual. His commitment to perfection and a deep understanding of product quality quickly led to ROE Visual becoming a top brand within the industry.

As an innovator in the field, Jason has consistently been a notable figure in the industry and is never content to rest on past achievements. In 2021 he sought new challenges and founded CECOCECO. With this venture, Jason embraced the idea that LED displays could be more than functional tools; they could integrate technology and aesthetics to create emotionally engaging experiences.

Jason’s reputation for producing high-quality products is built on years of experience and industry knowledge. His dedication to product development was evident in the launch of ArtMorph by CECOCECO. After two years of dedicated work and maintaining high standards, Jason and his team successfully introduced this innovative product to the market.

Under Jason’s leadership, CECOCECO is more than a brand; it’s a testament to ongoing innovation in how the world experiences and interacts with light and display technology.

M.R. Rangaswami: What were the key insights or experiences that led you from ROE Visual to creating CECOCECO, and how do these past experiences shape your current vision?

Jason Lu: I’ve come to recognize that traditional LED displays, while functional, are not universally applicable to every space and often clash with sophisticated designs. My ambition is to develop products that harmoniously blend functionality with aesthetic appeal. I firmly believe that innovation is fueled by pressure. ROE is currently experiencing stable growth, prompting me to initiate transformative changes.

Reflecting on my past experiences, I’ve gained a profound understanding of the path to success and the attitude required for it. I’ve learned that success is not an overnight phenomenon. ROE took 17 years to reach its current stature, reinforcing my belief in the ‘slow and steady wins the race’ philosophy. I don’t equate financial gain with success. While survival is crucial, it’s not the epitome of success. My vision for CECOCECO is to relentlessly pursue excellence in our products, continuously innovate, and be a source of inspiration for the industry and the world at large.

M.R.: How does CECOCECO innovate in the LED lighting and display industry, and what future advancements do you foresee in this space?

Jason: At CECOCECO, our focus is on pioneering solution-based innovation. While similar products and projects exist, we question their viability and sustainability. Our approach involves crafting systematic solutions with an unwavering commitment to quality in every aspect, from the consistent output of our products to the intricacies of our manufacturing process. This is far more than a mere mechanical production; it necessitates a blend of human creativity and precision control. Our development and manufacturing stages demand extensive manpower, embodying a level of craftsmanship of the highest order. CECOCECO’s mission is to transform previously disjointed elements into cohesive, sustainable systems.

Looking ahead, we aim to diversify our product range. This includes offering a wider variety of resolutions and shapes and innovating with flexible screen technologies. Our goal is to provide a more comprehensive and diverse range of solutions to meet the evolving needs of our customers.

M.R.: What emerging trends in LED technology and lighting design do you find most exciting, and how is CECOCECO preparing to integrate these trends into future products?

Jason: The landscape of LED lighting is undergoing two significant transformations. First, there’s a notable shift from point light sources to surface light sources, with Chip-On-Board (COB) technology gaining increasing popularity. This evolution marks a fundamental change in how we perceive and utilize LED lighting. Second, the realm of lighting design is witnessing a surge of creativity. It’s moving beyond mere color shifts and overlays; dynamic, imaginative light effects are becoming the norm, adding a refreshing dimension to lighting.

In response to these trends, CECOCECO is exploring integrating COB technology into our products to harness its unique effects. Lighting design isn’t just an aspect of our product; it’s a cornerstone. We’re committed to experimenting with various surface materials and designs to unlock new potential in creative lighting. Furthermore, we’re enthusiastic about collaborating with leading lighting designers. We aim to conceive and develop even more captivating lighting projects by merging our technological prowess with their creative flair.

M.R. Rangaswami is the Co-Founder of Sandhill.com

According to Ran Ronen, 2024 will be the year in which technology leaders innovate by example to help create more inclusive experiences and broaden the base of potential users and customers of their technology services and solutions by prioritizing digital accessibility.

Accessible websites and online experiences offer businesses a range of benefits, from ensuring compliance with regulatory requirements and industry best practices, to more users and customers accessing the site, to improved website SEO and brand trust and credibility. Before the advances made possible by AI, making a website fully accessible was a difficult goal for many website owners to achieve because of the challenges of managing end-to-end accessibility compliance.

Ran is the Co-Founder and CEO of Equally AI, the world’s first no-code web accessibility solution designed to help businesses of all sizes meet regulatory compliance. This conversation was an enlightening one, as he and I spoke about the positive shift he’s seeing in the tech field to embrace more accessibility guidelines as best practices.

M.R. Rangaswami: What is the state of digital accessibility, and why, in today’s tech-driven world, do you think companies are still slow to make accessibility a priority in user/customer experience?

Ran Ronen: The state of digital accessibility is evolving, yet its integration into mainstream tech remains slower than it should be. Although AI-driven accessibility tools are emerging, many companies still see accessibility as a complex and costly process, often overlooking or delaying it in favor of rapid development. This overlooks the opportunity to appeal to a wider, more diverse customer base and enhance product usability for everyone from the outset.

Slow adoption also stems from limited awareness of diverse user needs and the wider benefits of accessibility beyond legal compliance. There’s a critical need for tech leaders to see accessibility not just as a necessity for individuals with disabilities, but as a key factor in improving overall user experience and innovation, which in turn boosts brand reputation and customer satisfaction.

M.R.: What are some challenges faced by organizations in managing the technology implementation side of digital accessibility?

Ran: Organizations implementing digital accessibility often face several challenges, including a lack of in-house expertise on accessibility standards and implementation, which makes integrating these practices into existing tech frameworks difficult. Resource allocation is another challenge, as accessibility often competes with other business priorities and can be seen as an additional cost. Also, ensuring consistent accessibility across a diverse range of products and platforms presents a scalability challenge, requiring a strategic approach to meet various tech and user needs effectively.

M.R.: As an innovator in the space, what is your hope for the impact of AI in making more companies and their offerings more digitally inclusive?

Ran: As an innovator in the digital accessibility space, my aspiration is that AI will enable a shift in perspective, where digital accessibility becomes not just an aspiration but a practical reality for more companies, especially small and medium-sized businesses. This will help them proactively create accessible products and services, which not only enhances the user experience for all but also opens up new markets and opportunities for innovation.

M.R. Rangaswami is the Co-Founder of Sandhill.com

Ankit Sobti is co-founder and CTO for Postman, the world’s leading API Platform. Prior to joining Postman, Ankit worked for Adobe and Yahoo!, where he served as a senior software engineer. In his current role, Ankit focuses on product and development, leading the core technology group at Postman.

A key focus for this Q&A is the findings from a recent global survey Ankit and the Postman team published, tracking the most important trends around API use in large enterprises.

M.R. Rangaswami: APIs are critical tools for enterprise success, but should they also be considered products?

Ankit Sobti: Thinking about APIs as products helps to understand and articulate that APIs, like any other item you’d typically call a product – a website, a mobile app, a physical product – are required to be built with a consumer-driven mindset.

This requires an understanding of who the consumers are, what problems they are trying to solve, why it is a problem in the first place, and what else they are doing to solve this problem – and then consciously and deliberately designing a solution to this problem exposed through the interface of an API.

And like any other product, APIs also need to be packaged, positioned, priced, distributed, and iteratively improved to meet evolving consumer needs.

Postman’s 2023 State of the API Report, which surveyed over 40,000 people, found that 60% of API developers and professionals view their APIs as products – which I think is a good signal that this realization is well underway. And it makes sense that APIs are increasingly seen as products, serving both internal and external customers.

But how does this view vary by industry and company size? And how much revenue can APIs generate? It turns out that the larger the company, the likelier it is to view its APIs as products. At companies with over 5,000 developers, 68% of respondents said they considered their APIs to be products. At the other end of the spectrum were companies with fewer than 10 employees. There, just 49% of respondents viewed their APIs as products.

M.R.: Are APIs actual revenue generators now for companies?

Ankit: Yes, APIs are increasingly unlocking new streams of revenue and business opportunities for companies. In some of the more traditional industries with lower margins, for example, we are increasingly seeing APIs being used as a high-margin revenue stream. And there are numerous examples now of companies where the primary product being sold is the API.

APIs that package insights or key capabilities can be used to drive strategic partnerships or allow companies to become platforms on top of which others can build. We are seeing examples of this ranging from small development shops all the way to large enterprises.

This is something we also saw in our survey, with 65% of respondents affirming that their APIs generate revenue. Almost 10% of companies with money-making APIs said their APIs generated more than three-fourths of total revenue.

M.R.: Does an API-first approach impact revenue?

Ankit: API-first companies are defined as those that use APIs as the building blocks of their software strategy. APIs not only bind together the internal components of an organization, but also pave the way for seamless external collaboration. And thinking in terms of these building blocks, an API-first approach allows for easier externalization of the capabilities that APIs provide, and subsequently creates easier paths for revenue.

In addition, we believe that API-first companies have superpowers that foster happier developers and a healthier business ecosystem. In our customer base, we work with companies across a broad range of industries – and APIs generate significant amounts of revenue, unlock new business opportunities, and drive ecosystem expansion through partnerships.

And for companies with APIs, it’s worth weighing how much to invest in them and whether to adopt an API-first approach. These decisions may have a tangible impact on the bottom line.

M.R. Rangaswami is the Co-Founder of Sandhill.com

Allied Advisers has just released its inaugural report on product-led growth (PLG).

Product-Led Growth (PLG) is an innovative customer-centric business strategy that employs user-friendly products to acquire, retain, and expand the customer base, reducing the reliance on traditional sales and marketing.

As software users, we have had magical experiences with products that allow us to independently explore, test, purchase and expand usage without intervention from the product vendor’s sales team; these PLG strategies have been utilized successfully by leading SaaS companies such as Dropbox, Zoom, Klaviyo and Slack, among others. This contrasts with sales-led growth (SLG), which relies on direct sales teams to hunt and harvest product sales opportunities.

This report covers insights on how to develop a PLG strategy from Dharshan Rangegowda, a former Allied Advisers client who grew ScaleGrid via a PLG strategy before raising a growth round with a mid-market PE firm.

Additionally, the report provides details on transactions of PLG companies as well as profiles of certain PLG businesses in different verticals, indicating significant differences in operational efficiencies when adopting a PLG model.

A 5x SaaS entrepreneur, Godard Abel is CEO of G2, the world’s largest and most trusted software marketplace, which he co-founded in 2012. He is also Executive Chairman of ThreeKit, a leading 3D visualization technology company, and Logik.io, a next generation configuration technology.

Previously, Godard served as CEO of SteelBrick which was acquired by Salesforce in 2016. Prior to SteelBrick, Godard co-founded BigMachines, where he served as CEO and built it into a leading SaaS provider which was acquired by Oracle in 2013. He also served as a GM at Niku prior to its IPO in 2000 (and subsequent acquisition by CA).

Before entering the technology industry, Godard consulted for McKinsey & Company and advised leading manufacturers in the U.S. and Germany on strategy development and business process improvement. Godard was a Finalist for EY Entrepreneur of the Year in 2019, named to the Tech 50 list by Crain’s Chicago Business in September 2014, and to the Chicago Entrepreneur Hall of Fame in 2011. He earned an MBA from Stanford University and both a B.S. and M.S. in engineering from the Massachusetts Institute of Technology.

As you can tell by our conversation, Godard is not only an innovator and leader in the tech world, but he is also very skilled at sharing a lot of information in few words.

M.R. Rangaswami: How is software buying changing?

Godard Abel: B2B buyers now expect consumer-like shopping experiences, where they can conduct research and make purchases quickly, conveniently, and on their own terms. This means expensive software solutions can be bought with a credit card, and the buyer conducts research on review sites and other peer communities. In fact, G2 research finds that 67% of global B2B software buyers usually engage a salesperson once they have already made a purchasing decision.

M.R.: How does AI impact this shift in software buying behavior?

Godard: AI will only accelerate the ongoing shift to self-serve software research and buying, delivering modern digital buyer experiences. The ability of AI to provide immediate, data-driven insights is a key driver of this shift. With this in mind, software vendors have an opportunity to lean into AI to meet buyers’ preferences for speed, eliminating friction in the software buying journey.

M.R.: What role does G2 play in this evolving software landscape?

Godard: G2 has over 2.4 million verified reviews on 150,000+ products and services. All 1 billion knowledge workers around the world need software and they’re coming to G2 to research it. With our massive dataset on B2B software and the most traffic from software buyers, G2 is uniquely positioned to power software buying and selling in the age of AI.

Earlier this year, we introduced Monty, the first-ever AI-powered software business assistant built on OpenAI’s ChatGPT. Previously, a buyer would visit G2.com and search for the type of software they were looking for – CRM, for example. However, not every buyer knows exactly what they need.

With Monty, you can now describe the business challenge you’re looking to solve and have a conversation. Powered by G2’s extensive dataset, Monty can recommend the best software solutions for your particular need, making the process of researching software faster, easier, and more effective.

M.R. Rangaswami is the Co-Founder of Sandhill.com

What does the future of customer experience look like with generative AI?

According to Knowbl’s CEO and Co-Founder, Jay Wolcott, it’s going to be critical to understand the risk in implementing AI solutions and the requirements for what “enterprise-ready conversational AI” means.

In this conversation, Jay sheds light on how this innovative technology redefines customer experience, making interactions more seamless, convenient, and efficient.

M.R. Rangaswami: What exactly is “BrandGPT,” and how does it differ from traditional conversational AI technologies?

Jay Wolcott: BrandGPT is a revolutionary Enterprise Platform for Conversational AI (CAI) built from the ground up on large language models (LLMs). Legacy virtual assistance platforms built upon BiLSTM and RNN frameworks lack the speed, ease, and scalability that LLMs can offer through few-shot learning.

Through the release of this all-new approach, CAI can finally meet its potential of creating an effortless self-service experience for consumers with brands. The proprietary AI approach Knowbl has designed within BrandGPT offers truly conversational and contextual interactions while keeping generative AI within limits that guard against uncontrollable risks.

This new approach is driving tons of enterprise excitement for new levels of containment, deflection, and satisfaction across digital and telephony deployments. Beyond the improved recognition and conversational approach, Knowbl’s platform allows brands to launch quickly, leverage existing content, and improve the scalability of capabilities while reducing the technical effort to manage.

M.R.: What emerging trends do you foresee shaping the future of conversational AI and customer experience, and how can businesses prepare for these developments?

Jay: In 2024 we plan to overcome customer frustration with brand bots and virtual assistants, ushering in a new era of effortless and conversational experiences powered by advanced language models.

Brands that embrace LLMs for customer automation early on will establish a competitive advantage, while those who lag will struggle to keep up. Although many organizations are still in the experimental phase of using GenAI for internal purposes due to perceived risks, leading brands are boldly venturing into direct customer automation, reimagining digital interfaces with an “always-on” brand assistant.

We also predict 2024 to be the year that bad bots die. New expectations of AI will lead to frustrated consumers when dealing with legacy bots, and a trend in attrition versus retention will appear.

M.R.: What complexities do multinational companies face when implementing AI-driven solutions, and how can they navigate the challenges to ensure successful adoption across diverse markets?

Jay: Multinational companies encounter a myriad of complexities when implementing AI-driven solutions, stemming from the diversity of markets in which they operate. One significant challenge lies in reconciling varied regulatory landscapes and compliance requirements across different countries, necessitating a nuanced approach to AI implementation that adheres to local regulations.

Additionally, cultural and linguistic diversity poses a hurdle, as AI solutions must be tailored to resonate with the unique preferences and expectations of diverse consumer bases. To successfully navigate these challenges, companies must prioritize a robust localization strategy, customizing AI solutions to align with each market’s specific needs and cultural nuances.

Collaborating with local experts, remaining vigilant of regulatory changes, and fostering open communication with stakeholders are essential for multinational companies to achieve successful AI adoption across diverse markets.

M.R. Rangaswami is the Co-Founder of Sandhill.com

John Hayes is CEO and founder of autonomous vehicle software innovator Ghost Autonomy.

Prior to Ghost, John founded Pure Storage, taking the company public (PSTG, $11 billion market cap) in 2015. As Pure’s chief architect, he harnessed the consumer industry’s transition to flash storage (including the iPhone and MacBook Air) to reimagine the enterprise data center, inventing blazing-fast flash storage solutions now run by the world’s largest cloud and ecommerce providers, financial and healthcare institutions, science and research organizations and governments.

Like Pure, Ghost uses software to achieve near-perfect reliability and redefines simplicity and efficiency with commodity consumer hardware. Ghost is headquartered in Mountain View with additional offices in Detroit, Dallas and Sydney. Investors including Mike Speiser at Sutter Hill Ventures, Keith Rabois at Founders Fund and Vinod Khosla at Khosla Ventures have invested $200 million in the company.

Now, let’s get into it, shall we?

M.R. Rangaswami: How does the expansion of LLMs to new multi-modal capabilities extend their application to new use cases?

John Hayes: Multi-modal large language models (MLLMs) can process, understand and draw conclusions from diverse inputs like video, images and sounds, expanding beyond simple text inputs and opening up an entirely new set of use cases in everything from medicine to legal to retail applications. Training GPT models on more and more application-specific data will help improve them for their specific task. Fine-tuning will increase the quality of results, reduce the chances of hallucinations and provide usable, well-structured outputs.

Specifically in the autonomous vehicle space, MLLMs have the potential to reason about driving scenes holistically, combining perception and planning to generate deeper scene understanding and turn it into safe maneuver suggestions. The models offer a new way to add reasoning to navigate complex scenes or those never seen before.

For example, construction zones have unusual components that can be difficult for simpler AI models to navigate: temporary lanes, people holding signs that change, and complex negotiation with other road users. LLMs have been shown to process all of these variables in concert, with human-like levels of reasoning.

M.R.: How is this new expansion impacting autonomous driving, and what does it mean for the “autonomy stack” developed over the past 20 years?

John: I believe MLLMs present the opportunity to rethink the autonomy stack holistically. Today’s self-driving technologies have a fragility problem, struggling with the long tail of rare and unusual events. These systems are built “bottom-up,” composed of a combination of point AI networks and hand-written driving software logic to perform the various tasks of perception, sensor fusion, drive planning and drive execution – all atop a complicated stack of sensors, maps and compute.

This approach has led to an intractable “long tail” problem – where every unique situation discovered on the road requires a new special-purpose model and software integration, which only makes the total system more complex and fragile. With current autonomous systems, when the scene becomes so complex that the in-car AI can no longer drive safely, the car must “fall back” – either to remote drivers in a call center or by alerting the in-car driver.

MLLMs present the opportunity to solve these issues with a “top-down” approach by using a model that is broadly trained on the world’s knowledge and then optimized to execute the driving task. This adds complex reasoning without adding software complexity – one large model simply adds the right driving logic to the existing system for thousands (or millions) of edge cases.

There are challenges implementing this type of system today, as the current MLLMs are too large to run on embedded in-car processors. One solution is a hybrid architecture, where the large-scale MLLMs running in the cloud collaborate with specially trained models running in-car, splitting the autonomy task and the long-term versus short-term planning between car and cloud.

M.R.: What’s the biggest hurdle to overcome in bringing these new, powerful forms of AI into our everyday lives?

John: For many use cases, the current performance of these models is already good enough for broad commercialization. However, some of the most important use cases for AI – from medicine to legal work to autonomous driving – have an extremely high bar for commercial acceptance. In short, your calendar can be wrong, but your driver or doctor cannot.

We need significant improvements in reliability and performance (especially speed) to realize the full potential of this technology. This is exactly why there is a market for application-specific companies doing research and development on these general models. Making them work quickly and reliably for specific applications takes a lot of domain-specific training data and expertise.

Fine-tuning models for specific applications has already proven to work well in text-based LLMs, and I expect exactly the same thing will happen with MLLMs. I think companies like Ghost, which have lots of training data and a deep understanding of the application, will dramatically improve upon the existing general models. The general models themselves will also improve over time.

What is most exciting about this field is the trajectory: the amount of investment and the rate of improvement are astonishing, and we are going to see some incredible advances in the coming months.

M.R. Rangaswami is the Co-Founder of Sandhill.com

Gerry Fan serves as the Chief Executive Officer at XConn Technologies, a company at the forefront of innovation in next-generation interconnect technology tailored for high-performance computing and AI applications.

Established in 2020 by a team of seasoned experts in memory and processing, XConn is dedicated to making Compute Express Link™ (CXL™), an industry-endorsed Cache-Coherent Interconnect for Processors, Memory Expansion, and Accelerators, accessible to a broader market.

To speed the adoption of CXL, Gerry and his teams have introduced the world’s first hybrid CXL and PCIe switch – with a strategic approach that will make computers faster, smarter, and better for the environment.

M.R. Rangaswami: What barriers are being faced by AI and HPC applications that you are looking to solve?

Gerry Fan: Next-generation applications for artificial intelligence (AI) and high-performance computing (HPC) continue to face memory limitations. The exponential demand these applications place on memory bandwidth has become a barrier to their further innovation and widespread adoption.

The CXL specification has been developed to alleviate this challenge by offering unprecedented memory capacity and bandwidth so that critical applications, such as research for drug discovery, climate modeling or natural language processing, can be delivered without memory constraints. By applying CXL technology to break through the memory bottleneck, XConn is helping to advance next-generation applications where a universal interface can allow CPUs, GPUs, DPUs, FPGAs and other accelerators to share memory seamlessly.

M.R.: How are you looking to solve the challenge with the industry’s first and only hybrid CXL and PCIe switch?

Gerry: While CXL technology is poised to alleviate memory barriers in AI and HPC, a hybrid approach that combines CXL and PCIe on a single switch provides a more seamless pathway to CXL adoption. PCIe (Peripheral Component Interconnect Express) is a widely used interface for connecting hardware components, including GPUs and storage devices. Many traditional applications only need the interconnect capability offered by PCIe. Yet emerging next-generation applications need the higher bandwidth enabled by CXL. System designers can be stuck deciding which approach will best meet their needs.

XConn is meeting this challenge by offering the industry’s first and only hybrid CXL 2.0 and PCIe Gen 5 switch. Combining both interconnect technologies on a single 256-lane SoC, the XConn switch is able to offer the industry’s lowest port-to-port latency and lowest power consumption per port in a single chip – all at a low total cost of ownership. What’s more, system designers only have to design once to achieve versatile expansion, heterogeneous integration for a mix of accelerators, and fault tolerance, with the redundancy that mission-critical applications require for true processing availability.

M.R.: In your view, how will XConn revolutionize the future of high-performance computing and AI applications?

Gerry: Together with other leading CXL ecosystem players, XConn is delivering on CXL’s promise to support faster, more agile AI processing. This will deliver the performance gains AI and HPC applications need to accelerate research and innovation breakthroughs. It will also support greater energy efficiency and sustainability while helping to proliferate the “AI Everywhere” paradigm for smarter and more autonomous systems.

By helping to foster innovation and accelerate application use cases, XConn is delivering the missing link that will pave the way for unprecedented computing performance needed for tomorrow’s breakthroughs and technology advancements.

M.R. Rangaswami is the Co-Founder of Sandhill.com

When I sat down with Razat, he was clear that digitalisation is imperative for almost every organisation in every industry today, which is why more than $3trn is spent on it annually.

His rationale behind digitalisation is sound but, as he shared, studies show that much of that work is wasted – more than 40%, in some cases. This is largely due to the disconnect between strategy and what’s being executed by teams across the business.

As a leader in portfolio management and value stream management, Razat Gaurav shares in this conversation why bridging the strategy-execution gap is essential for organizational and leadership transformation.

Would you believe that 40% of strategy work gets wasted in execution?

M.R. Rangaswami: What is the biggest challenge orgs face when connecting strategy to execution?

Razat Gaurav: The biggest challenge between strategy and execution is change – change from technology shifts, demographic shifts, and even generational shifts. It’s not a new phenomenon. But what has changed is that the pace of change is exponentially faster. Companies must be able to quickly analyse and adapt or evolve their strategy – and how those changes are executed – while still driving important business outcomes.

M.R.: The research arm of The Economist found that 86% of executives think their organizations need to improve accountability for strategy implementation. What challenges do orgs face around measurement?

Razat: The key thing that gets in the way is data silos. Most organisations are swimming in data, yet most of that data is not usable to make decisions. Curating the relevant data to align with your priorities and objectives is critical to achieving accountability for strategy implementation.

What we find is that many organisations have three major gaps when they look at how they measure understanding of strategic goals.

First, organisations are measuring inputs or outputs, but they’re not measuring outcomes. Particularly when dealing with digital transformations, the business and technology teams must work together to focus on the outcome.

The second gap is around creating a synchronised, connected approach to objectives and key results – what some organisations call OKRs. Is leadership in alignment with the way an individual contributor gets measured? And does the individual contributor understand how they impact their leadership’s OKRs? That bidirectional synchronisation is key.

And then the last piece is how the different functions in the organisation – finance, manufacturing, sales, and so on – align their OKRs to help achieve the company’s objectives and key results.

M.R.: What should leaders do first to narrow the strategy-execution gap?

Razat: My first piece of advice would be, take a deep breath because change is constant.

As organisations, as leaders, as individuals, we all have to be ready to adapt and change. But beyond taking that deep breath, there are three things I’d advise organisations to do.

First, figure out the three initiatives that will actually move the needle. Second, define OKRs and an incentive structure for the outcomes you’re trying to achieve. Third, invest in systems that allow you to break out of those data silos to execute as one organisation, as one team.

M.R. Rangaswami is the Co-Founder of Sandhill.com

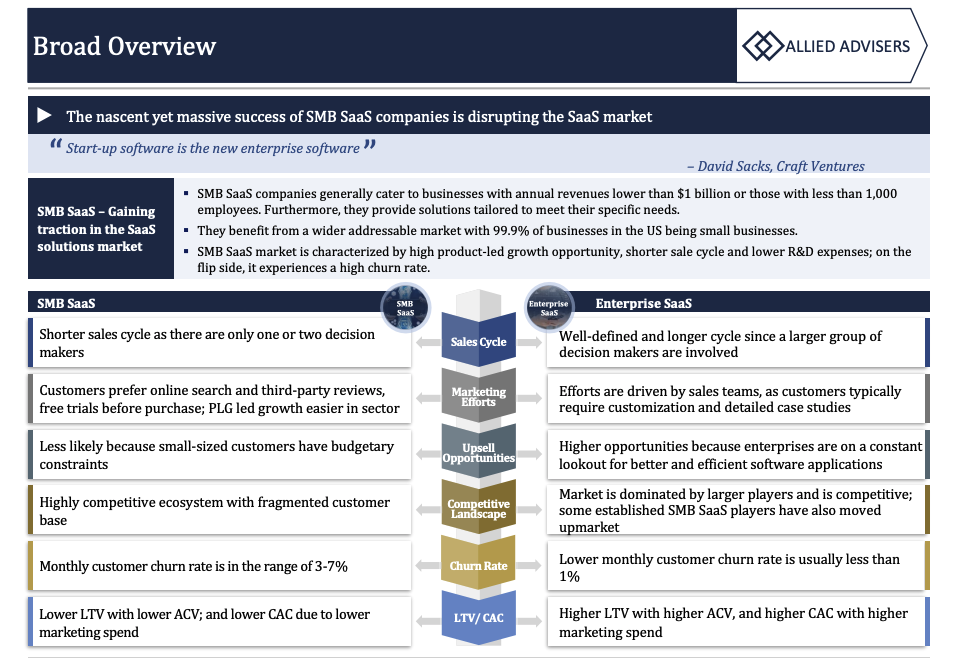

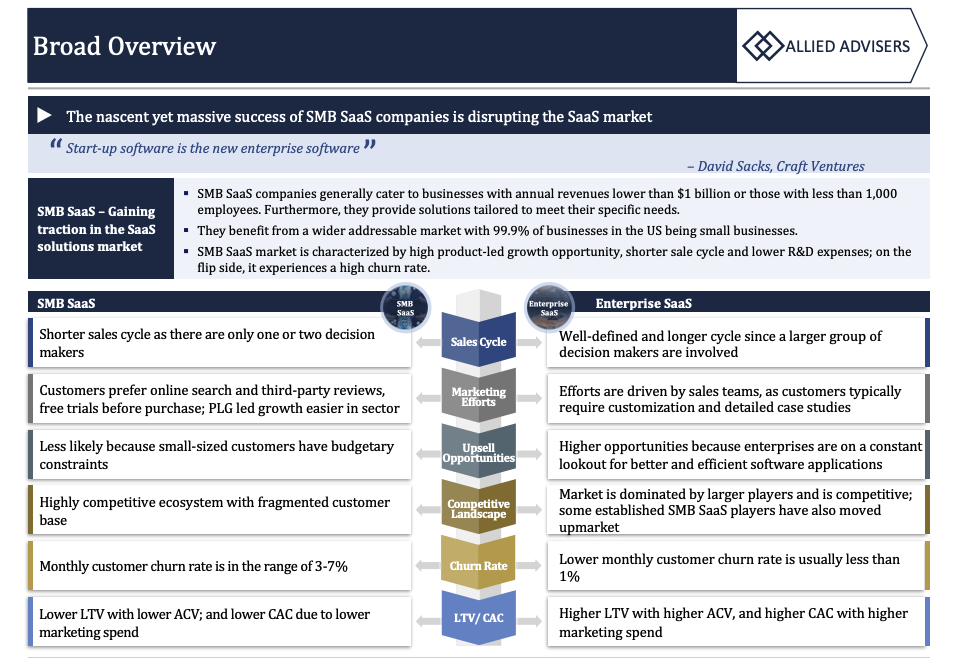

According to the recent update from Allied Advisers, SMB is the backbone of the US economy; 99.9% of all US businesses are in this segment. With rising SaaS adoption by small businesses for enhancing productivity, we remain optimistic on the long-term view of this sector.

While SMB SaaS, not surprisingly, has higher churn than Enterprise SaaS, it has significantly better operational metrics for sales and marketing expense, R&D expense and EBITDA margins, and it faces less sector competition. Our report covers nuances of SMB SaaS and we believe that SMB SaaS businesses continue to offer compelling opportunities for investors and buyers.

This Allied Advisers report updates the firm’s view of SMB SaaS, highlighting a sector that has been growing with notable outcomes.

The report draws on the IPOs of Freshworks ($1.03B), DigitalOcean ($780M) and Klaviyo ($576M), notable exits such as Mailchimp’s acquisition ($12B+, one of the largest bootstrapped exits), and the growth of private SMB SaaS companies like Calendly (last valued at $3B) and Notion (last valued at $10B).


Gaurav Bhasin is the Managing Director of Allied Advisers.

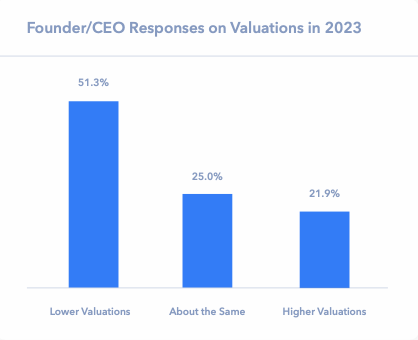

One year ago, Software Equity Group started their 2022 report on M&A trends with a simple observation: the stock market activity was not for the faint of heart. That view led to a much broader inquiry throughout the report into the myriad of dynamics at play and the associated impact on the software M&A market.

So how are Founders and CEOs exercising caution when considering M&A and liquidity events in the face of ongoing economic uncertainty, and is their restraint warranted?

To cut to the chase: it depends. For software businesses with the right profile (more on that later), there is tremendous opportunity in the current M&A landscape.

To better assess the state of the market, SEG analyzed data from our annual survey of CEOs, private equity investors, and strategic buyers, in addition to our quarterly report and our transactions.

HERE ARE SEG’S 4 TAKEAWAYS FROM THE RESEARCH: