ETL (Extract, Transform, and Load) — or more recently ELT (Extract, Load, and then Transform)— has been the workhorse of data-integration strategies for many years. The concept of consolidating data into a central data warehouse for analytical reporting requires a technology such as ETL to do the heavy lifting of data replication and data quality processing. However, as with most venerable technologies, the needs of the business have moved beyond the capabilities of most ETL products.

Inherently, ETL is a batch process, and there is no need to expand on why that is not good enough in many situations. ETL also follows a traditional Waterfall approach to design and implementation, where there is more pressure to be agile in business intelligence (BI) and development. Lastly, data sources and targets themselves have expanded beyond the traditional, structured world of databases, data stores, and data warehouses. Companies now realize that unstructured data — some of it from outside of the organization — holds tremendous value and is something they want to exploit.

Unfortunately, ETL tools were not designed for this new world. They were created to handle the bulk transfer of structured, mainly internal data from operational and transactional systems to a data warehouse.

So what do we do with these existing ETL processes? As companies move more towards these new data sources, the ETL processes become less effective and less relevant. Is it time to retire these elder ETL statesmen and do away with the complex and rigid ETL processes? Certainly not! Older ETL processes still work with the structured data that they were designed to work with and they still feed data warehouses that are used by hundreds, if not thousands, of users on a daily basis. They also continue to add value to the data in your organization. So why would you simply throw them away?

After all, the ETL process allows accumulation of historical data where it is needed. It also supports complex data quality operations to ensure absolute data consistency across sources, something that is essential when dealing with regulatory reporting requirements. While sales and marketing might be willing to trade off “good enough” data to gain more agility for market segmentation or customer analysis, your finance and regulatory departments need to be absolutely certain about the data’s veracity before they use it and are willing to wait for periodic reporting.

So, rather than get rid of ETL processes, organizations are opting to enhance them with other technologies such as data virtualization to embrace their new — and future — data sources and formats.

Solutions like data virtualization can breathe new life into existing ETL processes and help organizations embrace the multitude of different data formats and sources. While there is a common perception that data virtualization is trying to replace ETL, that is simply not true. While there will be fewer technical reasons for data replication and more business need for agile integration, persisted data and ETL are not going away anytime soon. There are numerous use cases where they complement each other and provide overall better results than either could if used alone.

It is now clear to most people that data virtualization is more than just data federation. It abstracts logical views, decouples systems, serves up data services and yes, of course, it also federates queries across disparate sources, often supported by partial persistence in cache or in-memory.

Data virtualization allows you to connect to any data source — internal or external, structured or unstructured — and integrate this data to create canonical views on the data (business entity views, such as “customer” or “product”). You can then expose these views as data services, supporting multiple formats and protocols such as SQL, Web services (SOAP/XML and RESTful), Portlets, Web Parts for SharePoint integration, JMS messaging, and so on.

A common pattern is data warehouse prototyping where, in the initial stages, data virtualization is used to shorten cycle times to define the business requirements for data and analytics and provide useful results quickly to end users directly from disparate sources. Once this is accomplished, companies find that there is some data that must be persisted and some that can remain virtualized.

In this case, the ETL tool points to the virtualization layer as its data source and in some cases adds some more complex cleansing operations (e.g., multi-pass loops) in ETL, and then loads the results in a data warehouse or data mart. In this evolution, there is still value in leaving the data virtualization layer, since new data sources or changes to underlying sources are much easier to manage in data virtualization than making changes to ETL jobs.

So ETL provides persistence, while data virtualization provides agility.

Another reason data virtualization is used as a source for ETL is to gain access to multi-structured data sources. Data virtualization does this much better and with more flexibility, enabling IT teams to bring them into their structured ETL/data warehouse world — not just for ETL processes, but for any application or tool that can utilize this data.

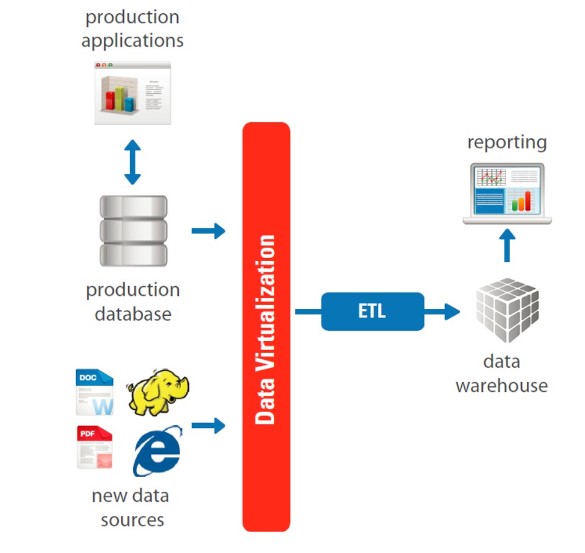

For example, you can use the data virtualization platform to easily connect to NoSQL, Web, social media, sensor data, and even unstructured documents and files, structure them and then expose them to the ETL tools for subsequent storage in your data warehouse (see Figure 1).

Figure 1 – Data Virtualization and ETL

Adapted from “Unleashing the Full Value of Big Data” Webinar

This is the simplest of cases and is applicable if the new data is suitable (i.e., it will conform to your data warehouse model) and necessary (i.e., need persistence) for storing in your data warehouse. But what happens if this is high-velocity data that isn’t suitable for your data warehouse but is needed for real-time or operational reporting purposes?

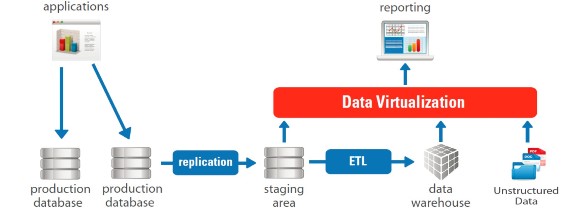

In this case, you can use the data virtualization platform to connect to the data source, combine it with data pulled from the data warehouse and expose it to your reporting tools (see Figure 2). If you are wondering if this is similar to using a semantic layer within a BI tool, you’re correct. But in this case multiple BI tools — and who doesn’t have more than one — can share the semantic layer, which also provides data services to other applications and users.

Figure 2 – Enhancing your Data Warehouse

Adapted from “Unleashing the Full Value of Big Data” Webinar

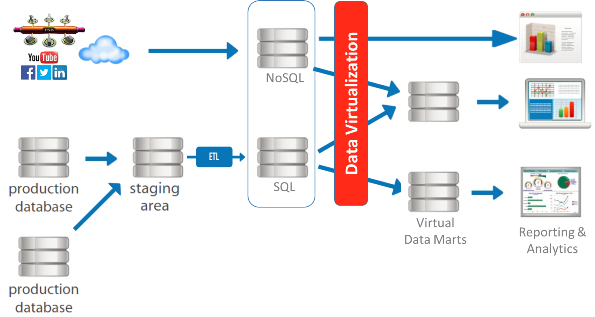

A third pattern is the use of data virtualization in conjunction with ETL in the world of Big Data. There are several sub-patterns — Big Data used as a staging area for ETL, as an analytical sandbox, as cold storage for less frequently used data, and a hybrid data warehousing scenario (see Figure 3).

Here, very large data volumes are analyzed using, say, Hadoop, while other data sits in a data warehouse. Data virtualization is used to combine them and present it to ETL tools for loading to data marts or directly expose them to other reporting/analytic tools. Modern data virtualization platforms have sophisticated query-optimization techniques including push-down delegation and caching, which ensure that complex workloads are handled with high performance.

Figure 3 – Hybrid Data Warehouse

Adapted from “Unleashing the Full Value of Big Data” Webinar

So before retiring tried-and-true solutions, remember the better option is often to marry the new with the old. Data virtualization can extend and enhance your existing ETL processes and data warehouses to encompass the new data sources, whether internal or external, structured or unstructured. This allows you to maintain your ETL jobs and processes, reduce some data replication where it makes sense and invest in expanding your tool kit to keep up with seemingly insatiable demands for new data and shorter delivery cycles.

Paul Moxon is senior director of product management and Suresh Chandrasekaran is SVP North America at Denodo Technologies, a leader in data virtualization. For more information contact pmoxon@denodo.com or suresh@denodo.com.