Welcome to the twilight zone of information management where every second of every day terabytes and petabytes of structured and unstructured information are relentlessly created by machines, humans and now their devices. The digital exhaust from social media, BYOD and telecommunications represents an almost unmanageable tsunami of information that is challenging nearly every organization, business and individual globally. Many organizations have hundreds of databases and information archives, often in silos, making it nearly impossible for anyone to find the right and related information for accurate and business-critical decision making.

The real challenge for organizations is that nearly 80 percent of all information is in an unstructured format, making it difficult to search, navigate and organize. Knowledge workers spend 25 percent of their time searching for information, contending with structured and unstructured data that don’t work well together and with solutions that work well on the Web but not in the enterprise. As inherently analytical scientist types, we like to build our house on a rock of solid primary and secondary research.

In this article we explore the anatomy of Big Data from the perspectives of technology, emerging technologies, its challenges to organizations and individuals, the overall market, its business value and social media. Also included are some seminal insights from this year’s first Big Data Boot Camp held in May in NYC and a Big Data survey.

Big Data technology

The archaic databases of the 1980s weren’t designed for, and aren’t agile or fast enough to manage, all Big Data types and objects. They are also prohibitively expensive for most organizations, and the management of their backup and recovery processes is extremely complex.

In our view, the most significant challenges that Big Data presents to the enterprise revolve around what we call the “four pillars of Big Data” and the characteristics and data types that make them easy or hard to manage.

The four pillars of Big Data

| Big Tables | Big Text | Big Metadata | Big Graphs |
|---|---|---|---|
| Structured | Unstructured | Data about data | Object connections |
| Relational | Natural language | Taxonomies | Subject predicate object |
| Tabular | Full text | Ontologies | Triple store |
| Rows and columns | Grammatical | Glossaries | Semantic discovery |
| Traditional | Semantic | Facets | Degrees of separation |
| Concepts | Linguistic analysis | | |
| Entities | Schema-free | | |

RDBMS, open source and Hadoop

There are many new open source and proprietary emerging technologies and platforms such as NoSQL databases that are designed to handle unstructured data, and a healthy ecosystem of solution and tool vendors has emerged around them. New innovative search enhancement tools such as Applied Relevance’s Epinomy automate taxonomy building and enable organizations to manage and organize unstructured data for high performance search and nearly real-time decision making. One of the best recent blogs on this area is Doculabs’ blog.

Hadoop is clearly the most important Big Data-enabling technology and is rapidly becoming the heart of any modern data platform. It is a cost-effective and scalable platform for staging Big Data; however, some NoSQL databases, such as MarkLogic, don’t really require Hadoop.

One of the most insightful presentations at this year’s Big Data Boot Camp came from a Big Data consultant, Alex Gorbochev. Below is a brief overview of his view of RDBMS, NoSQL, Big Data and Hadoop.

When RDBMSs make no sense

- Storing images and video

- Processing images and video

- Storing and processing other large files

  - PDFs, Excel files

- Processing large blocks of natural language text

  - Blog posts, job ads, product descriptions

- Semi-structured data

  - CSV, JSON, XML, log files

- Ad-hoc, exploratory analytics

- Integrating data from many volatile external sources

- Data clean-up tasks (data wrangling)

- Very advanced analytics (machine learning)

- Business domain knowledge is not well defined

Key benefits of Hadoop

- Reliable solution based on unreliable hardware

- Designed for large files

- Load data first, structure later

- Designed to maximize throughput of large scans

- Designed to leverage parallelism

- Designed to scale

- Flexible development platform

- Solution ecosystem
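Two of these benefits, "load data first, structure later" and parallelism, are easier to see in a small sketch. The Python below is not Hadoop code and uses no Hadoop API; it only imitates the map, shuffle and reduce phases in a single process. The log lines, field layout and function names are assumptions made up for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple

# Hypothetical raw web-log lines, stored exactly as they arrived (no schema yet).
RAW_LOG_LINES = [
    "2013-05-01 10:00:01 GET /products/42 200",
    "2013-05-01 10:00:02 GET /products/42 200",
    "2013-05-01 10:00:03 GET /cart 500",
    "malformed line",
    "2013-05-01 10:00:04 GET /products/7 200",
]


def map_phase(line: str) -> Iterator[Tuple[str, int]]:
    """Apply structure at read time: emit (path, 1) for each parseable line."""
    fields = line.split()
    if len(fields) == 5:  # assumed layout: date, time, method, path, status
        yield fields[3], 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Group values by key, as a framework would between map and reduce."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Sum the counts collected for one key."""
    return key, sum(values)


def count_hits(lines: Iterable[str]) -> Dict[str, int]:
    """Run map, shuffle and reduce in sequence over the raw lines."""
    mapped = (pair for line in lines for pair in map_phase(line))
    return dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())


hits = count_hits(RAW_LOG_LINES)
```

Because each call to `map_phase` looks at one line and each call to `reduce_phase` looks at one key, a framework is free to spread those calls across many nodes, which is the property the list above refers to.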

Some key use cases for Hadoop include analysis of customer behavior, optimization of ad placements, customized promotions and recommendation systems such as Netflix, Pandora and Amazon.

Hadoop also provides inexpensive archive storage along with an ETL layer and a transformation and data-cleansing engine. One of its key differentiators is reported to be its ability to scale to clusters of 100 to 1,000 nodes, whereas a traditional RDBMS supports perhaps dozens.

Big Data and In-Memory

In-memory databases and appliances are another welcome addition to the Big Data and business intelligence arsenal, one that is significantly changing and optimizing many business processes. They have the potential to allow organizations, line-of-business managers and the C-suite to spend more time on creating simulations, scenario planning and analysis of Big Data, and less time on building and waiting for queries. Check out this In-Memory article by Asterias Research on SandHill.

Big Data and graph databases

Graph databases are among the most important technologies in the Big Data space. For highly connected data they are sometimes faster than SQL databases, and they greatly enhance and extend the capabilities of predictive analytics by incorporating multiple data points and interconnections across multiple sources in real time. Predictive analytics and graph databases are a perfect fit for the social media landscape, where various Big Data points are interconnected.
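To make the idea of interconnected data points concrete, here is a minimal sketch of the "subject predicate object" model from the four pillars table, with a breadth-first search that computes degrees of separation. It is plain Python rather than any particular graph database, and the people, brand and predicates are invented for illustration.

```python
from collections import deque

# Hypothetical (subject, predicate, object) triples; all names are made up.
TRIPLES = [
    ("alice", "follows", "bob"),
    ("bob", "follows", "carol"),
    ("carol", "follows", "dave"),
    ("alice", "likes", "brand_x"),
    ("dave", "likes", "brand_x"),
]


def neighbors(node, triples):
    """Return every node linked to `node`, ignoring edge direction."""
    linked = set()
    for subject, _predicate, obj in triples:
        if subject == node:
            linked.add(obj)
        elif obj == node:
            linked.add(subject)
    return linked


def degrees_of_separation(start, goal, triples):
    """Breadth-first search; returns the hop count, or None if unconnected."""
    queue = deque([(start, 0)])
    seen = {start}
    while queue:
        node, hops = queue.popleft()
        if node == goal:
            return hops
        for nxt in neighbors(node, triples) - seen:
            seen.add(nxt)
            queue.append((nxt, hops + 1))
    return None
```

In this toy data set, alice and dave are three "follows" hops apart but only two hops apart through the brand they both like, which is the kind of cross-source connection a graph model surfaces naturally.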

The Big Data market

According to Gartner, Big Data initiatives will drive more than $200 billion in IT spending over the next four years along with tremendous change in how Big Data is managed. McKinsey has predicted that businesses will get $3 trillion in business value from Big Data in the next several years.

But what is really going on in the Big Data space is the IT departments’ lemming-like move to inexpensive open source solutions that are facilitating the modernization of data centers and data warehouses. And at the center of this universe is Hadoop.

From our perspective, only a few of the companies positioned as “Big Data” companies are actually making money at this time. Organizations are moving away from the traditional IT vendors and their business models that often come with pricey software and hardware systems, along with significant maintenance fees exceeding 22 percent per year.

In the evolution of the Big Data market, open source is playing a seminal role as the disruptive technology challenging the status quo.

In a recent survey of 300 data managers conducted by Information Today for the Big Data Boot Camp conference, we identified some interesting trends in the Big Data market, along with the top industries with Big Data projects, business initiatives and the types of data being used.

Top Big Data industries

- Retail

- Financial services

- Technology

- Manufacturing

- Government

- Education

- Telecommunications

Current Big Data business initiatives

- Customer analysis

- Historical data analysis

- Machine data, production system monitoring

- Website monitoring and analysis

- IT systems log monitoring and analysis

- Competitive market analysis

- Content management

- Social media analysis

Big Data signal types

- Production or transactional data

- Real-time data feeds

- Textual data

- ERP data

- CRM data

- Historical data

- Web logs

- Social media data

- Multimedia data

- Machine-to-machine data

- Sensor data

- Spatial data

Is Big Data Big Brother?

Big Data is a new frontier for data privacy and security. Many of us are now realizing that we are indeed “selling” our data on Facebook, Google and Twitter. In many ways we are not their customers; we are their product. All social media networks are collecting information about us and selling it to advertisers and other organizations that are targeting affinity groups and influencers related to their business.

“If My Data Is an Open Book, Why Can’t I Read It?” is an excellent May 26, 2013, article in The New York Times that explores how much data is collected about us and, more importantly, how much of that (your) data you can access. In the case of wireless providers, you don’t get access to your location logs without a subpoena, and few companies, including the social media kings, provide transparency as a feature to their customers and members the way Amazon does.

Consequently, you don’t own your own data, and at Google it’s really about data events!

Think about your data sphere. Below is a partial list of what it could be.

Your Big Data sphere

- FICO scores

- Time-of-purchase data

- HIPAA

- Background checks

- Videos in your neighborhood

- City police surveillance

- Business surveillance

- Intersection and red-light monitoring

- Personal home surveillance

- ATMs

- YouTube

- Smart phone tracking

- Smart phone application use

- Social media interactions: Yelp, Facebook, Twitter, etc.

- Flickr

- Your blog

- Your search patterns

- Your blood pressure monitors, calorie counter and movement sensors

Social media and Big Data

Social media networks are creating large data sets that are now enabling companies and organizations to gain competitive advantage and improve performance by understanding customer needs and brand experience in nearly real time. These data sets provide important insights into real-time customer behavior, brand reputation and the overall customer experience.

Intelligent or data analysis-driven organizations are now monitoring, and some are collecting, these data from proprietary social media networks such as Salesforce Chatter and Microsoft Yammer and open social media networks like LinkedIn, Twitter, Facebook and others.

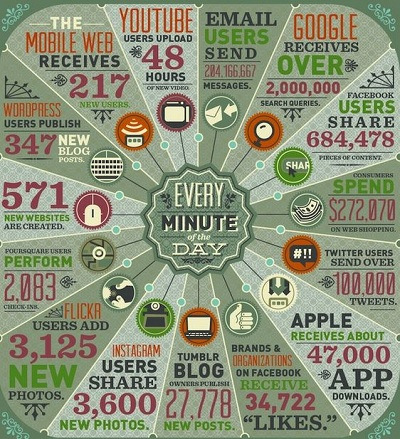

Figure 1

The majority of organizations today are not harvesting and staging Big Data from these networks but are leveraging a new breed of social media listening tools and social analytics platforms. Many are employing their public relations agencies to execute this new business process. Smarter data-driven organizations are extrapolating social media data sets and performing predictive analytics in real time and in house.

There are, however, significant regulatory issues associated with harvesting, staging and hosting social media data. These regulatory issues apply to nearly all data types in regulated industries, healthcare and financial services in particular. The SEC and FINRA, along with Sarbanes-Oxley, require that different types of electronic communications be organized and indexed in a taxonomy schema, archived and easily discoverable over defined time periods.

Data protection, security, governance and compliance have entered an entirely new frontier with the introduction and management of social data.

Figure 2

Social media analytical tools identify and analyze text strings that contain targeted search terms, which are then loaded into databases or data-staging platforms such as Hadoop. This can enable database queries by, for example, date, region, keyword or sentiment. These queries can provide insight into customer attitudes toward brands, products, services, employees and partners.

The majority of products work at multiple levels and drill down into conversations. Results are depicted in customizable charts and dashboards as shown in the image above.
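The filter, load and query flow described above can be sketched in a few lines of Python using the standard `sqlite3` module as a stand-in for the staging database. Everything here is an assumption for illustration: the posts, the search term, the word lists and the deliberately naive sentiment scoring bear no relation to how any commercial listening tool works.

```python
import sqlite3

# Hypothetical posts; a real listening tool would pull these from a network API.
POSTS = [
    ("2013-05-01", "US", "Love the new Acme phone, great battery"),
    ("2013-05-01", "UK", "Acme support was terrible today"),
    ("2013-05-02", "US", "Lunch was great"),
    ("2013-05-02", "US", "Acme update is terrible and slow"),
]
SEARCH_TERMS = {"acme"}          # assumed targeted search terms
POSITIVE = {"love", "great"}     # assumed positive word list
NEGATIVE = {"terrible", "slow"}  # assumed negative word list


def tokenize(text):
    """Lowercase the text and split it into a set of words."""
    return set(text.lower().replace(",", " ").split())


def score_sentiment(text):
    """Naive word-list sentiment: positive, negative or neutral."""
    words = tokenize(text)
    balance = len(words & POSITIVE) - len(words & NEGATIVE)
    return "positive" if balance > 0 else "negative" if balance < 0 else "neutral"


def load_matching_posts(conn, posts):
    """Keep only posts that mention a search term and stage them in a table."""
    conn.execute(
        "CREATE TABLE mentions (day TEXT, region TEXT, sentiment TEXT, body TEXT)"
    )
    for day, region, body in posts:
        if tokenize(body) & SEARCH_TERMS:
            conn.execute(
                "INSERT INTO mentions VALUES (?, ?, ?, ?)",
                (day, region, score_sentiment(body), body),
            )
    conn.commit()


conn = sqlite3.connect(":memory:")
load_matching_posts(conn, POSTS)

# Query the staged mentions by region and sentiment.
rows = conn.execute(
    "SELECT region, sentiment, COUNT(*) FROM mentions "
    "GROUP BY region, sentiment ORDER BY region, sentiment"
).fetchall()
```

The same table could just as easily be queried by `day` or filtered on a keyword in `body`; the grouped counts in `rows` are the sort of figures a dashboard would then chart.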

Social media-Big Data analytics

On the bleeding edge of social media analytics is a new wave of tools and highly integrated platforms that provide social media listening and enable organizations to understand content preferences (or content intelligence) by affinity group, along with which brands those groups are following and which are trending.

There were several early leaders in this space; however, they were acquired (sucked into the vortex of their acquirers) and embedded into larger platforms, resulting in a loss of the original innovators and intellectual property. The good news is that the innovators are here.

Below is a short list of new vendors that are taking social media data to a new level.

Some new social analytic tools

- Attensity http://attensity.com

- Infinigraph http://www.infinigraph.com

- Brandwatch http://www.brandwatch.com

- BambooEngine http://www.manumatix.com

- Kapow http://kapow.com

- Crimson Hexagon http://www.crimsonhexagon.com

- Sysomos http://www.sysomos.com

- Simplymeasured http://simplymeasured.com

- Netbase http://www.netbase.com

- Gnip http://gnip.com

Varieties of Business-Critical Big Data

- Legal and regulatory

- Digital metrics

- Energy cost

- Senior monitoring

- Predictive

- Electronic trading

- Sustainability and environmental

- Supply chain

- Genetic

- Vehicle telematics

- Real time

- Oil prices

- Consumer behavior

- Social

- Emerging markets

- Location

- Disaster

- Product

Net/Net

The good news is Big Data provides many more new types of data for analysis that are seminal in this millennium, which is all about the new data-driven culture of real-time decision making.

As research by MIT’s Brynjolfsson and McAfee shows, companies in the top third of their respective industries in the use of data-driven decision making are, on average, 5 percent more productive and 6 percent more profitable than their competitors (HBR, October 2012).

Multiple new and diverse Big Data sets add additional parameters to some business models that weren’t available before. For many CEOs Big Data is all about potentially disruptive business-critical Big Data.

In summary, data is information, and the characteristics of Big Data are all about high volume, high velocity and a wide variety of information assets that facilitate new forms of decision making and leverage these characteristics for competitive advantage.

Peter J. Auditore is principal researcher at Asterias Research, a consultancy focused on information management, traditional and social analytics and Big Data. He was a member of SAP’s Global Communications team for seven years and recently head of SAP Business Influencer Group. He is a veteran of four technology startups including Zona Research (cofounder), Hummingbird (VP marketing Americas), Survey.com (president) and Exigen Group (VP corporate communications). He has over 20 years’ experience selling and marketing software worldwide.

George Everitt, CEO, founded Applied Relevance in 2006. Prior to AR, George was a senior consultant at Verity (10 years) and Autonomy (one year). While at Verity and Autonomy, George participated in dozens of professional services engagements worldwide implementing high-performance, super-scalable enterprise search applications. The breadth of George’s experience covers most industries, including pharmaceutical, telecommunications, retail, manufacturing, public sector and financial services.