If you follow the Discovery Channel show MythBusters, you will recognize the title of this article as a play on a quote often stated by Adam Savage: “I reject your reality and substitute my own.” (The quote originally comes from a 1974 episode of Dr. Who.) In working with large companies doing data integration across the organization, I have come across a number of trends in how a company perceives the value of defining and implementing an enterprise data model (EDM). This perception directly affects the integration teams responsible for delivering data transformation and integration projects, whose services may or may not conform to the data model.

The goal of an enterprise data architect is to define an enterprise-wide vocabulary that provides an IT organization with a blueprint for describing data in a common and consistent way. However, integration teams are project driven and tend to push back on these concepts, which creates tension with the organization’s desire for uniformity and consistency. By its very nature, an integration project involves taking two differently represented objects (think source and target) and transforming the source to match the target representation.

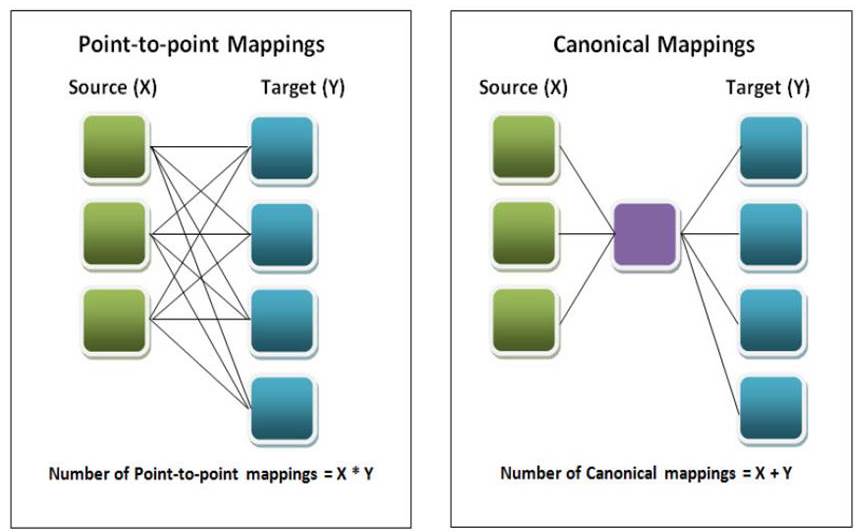

Simple example: A business receives purchase orders represented in the ASC X12 format, which is a flat file representation of hierarchical data. They want to translate these purchase orders either to an XML representation that is validated by an XSD that conforms to the enterprise data model, or to a CSV layout that can be directly imported into the back-end accounting system that the data is ultimately intended for. Mapping the data directly to the intended target might be faster and easier, but this often comes at the cost of reusability and manageability as companies accumulate thousands of point-to-point maps over time.

On the other hand, using an enterprise data model approach requires mapping the source data to a common format and then mapping that common format to the target. This is known as the canonical approach to integration. Given these two options, a project team on a tight deadline may choose the easier route.
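As a rough illustration of the canonical approach, the sketch below maps a source record to a common format once and then derives both targets from that common format. It is a minimal sketch, not a real implementation: the `CanonicalOrder` fields, the source field names (`po_number`, `buyer_name`, `total`), and the XML element names are all made up for this example, and the source record is assumed to have already been parsed out of the X12 file.

```python
import csv
import io
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from decimal import Decimal


@dataclass
class CanonicalOrder:
    """Hypothetical canonical purchase order; field names are illustrative."""
    order_id: str
    buyer: str
    total_cents: int


def source_to_canonical(record: dict) -> CanonicalOrder:
    """Map an already-parsed source record (assumed field names) to the canonical form."""
    return CanonicalOrder(
        order_id=record["po_number"],
        buyer=record["buyer_name"],
        # Decimal avoids floating-point rounding when converting to cents.
        total_cents=int(Decimal(record["total"]) * 100),
    )


def canonical_to_xml(order: CanonicalOrder) -> str:
    """Render the canonical order as XML (element names are assumptions)."""
    root = ET.Element("PurchaseOrder")
    ET.SubElement(root, "OrderId").text = order.order_id
    ET.SubElement(root, "Buyer").text = order.buyer
    ET.SubElement(root, "TotalCents").text = str(order.total_cents)
    return ET.tostring(root, encoding="unicode")


def canonical_to_csv_row(order: CanonicalOrder) -> str:
    """Render the canonical order as one CSV row for a hypothetical accounting import."""
    amount = f"{order.total_cents // 100}.{order.total_cents % 100:02d}"
    buffer = io.StringIO()
    csv.writer(buffer, lineterminator="\n").writerow([order.order_id, order.buyer, amount])
    return buffer.getvalue().rstrip("\n")


# One source map feeds both targets through the canonical form.
order = source_to_canonical(
    {"po_number": "PO-1001", "buyer_name": "Acme, Inc.", "total": "19.99"}
)
print(canonical_to_xml(order))
print(canonical_to_csv_row(order))
```

Adding a third target in this arrangement means writing one new `canonical_to_...` function; the source map is left untouched.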

The diagrams below demonstrate how, over time, employing an enterprise data model reduces the complexity and improves the reusability and manageability of an integration landscape compared with not employing one.
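The complexity argument can also be put in rough numbers. The sketch below assumes a worst case in which every source format must eventually reach every target format; real landscapes are usually sparser, so treat the figures as an upper bound rather than a measurement. The function names are invented for this example.

```python
def point_to_point_maps(sources: int, targets: int) -> int:
    """Worst case: one dedicated map for every source/target pair."""
    return sources * targets


def canonical_maps(sources: int, targets: int) -> int:
    """One map per source into the common format, plus one per target out of it."""
    return sources + targets


for sources, targets in [(2, 2), (10, 10), (50, 40)]:
    print(
        f"{sources} sources, {targets} targets: "
        f"{point_to_point_maps(sources, targets)} point-to-point maps vs "
        f"{canonical_maps(sources, targets)} canonical maps"
    )
```

With only two sources and two targets the two approaches cost the same (four maps each), which is one reason the direct route looks attractive to a single project. At 50 sources and 40 targets the worst case is 2,000 point-to-point maps against 90 canonical ones.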

This model gets fuzzier, though, when the integration team takes the EDM approach but doesn’t use it as intended. For example, suppose the data model has no attribute to represent a customer’s shoe size. No matter; just stick it into an unused attribute of the service called “GTIN,” whatever that is. This haphazard approach is a major source of EDM breakdown that I often see. It occurs because integration teams are generally working with downstream artifacts produced from the EDM (such as an XSD representing the physical data model) rather than with the model itself.

Ways to approach the EDM: top-down, bottom-up and middle-out

Companies that have invested time and materials in trying to define a top-down EDM for an entire organization can run into this type of issue fairly often. Many times, the big-bang approach to the EDM fails because organizations spend too much time and money bringing in high-cost consultants to assess their IT landscape and define the model.

However, some organizations have successfully implemented a top-down EDM along with governance to ensure that all parts of the organization produce solutions and ROI that conform to the EDM. The trouble is that this top-down approach to modeling can suffer multiple restarts and may even fail before it is ever implemented. Other times, once the model is implemented, teams simply stop adhering to it: they either ignore it or, in order to complete projects, reuse it in ways that were never intended. The end result is that the EDM gets marginalized.

On the flipside, organizations can try to build an EDM from the bottom up. In this scenario, organizations start with nothing and slowly evolve the EDM as they work through projects. The challenge with this approach is that there’s no framework or discipline to guide the creation of the EDM. There’s also no guarantee that all systems and processes are represented; as a result, many siloed or legacy systems remain just that. If an organization is serious about establishing an EDM, this route often leads to multiple rewrites and iterations before reaching the desired outcome.

If starting from scratch is too daunting, companies can use predefined models and standards to jump-start their enterprise data model development. There are a few industry domain models in existence that companies can use as starting points. Some are industry- or vertical-specific schemas and models, such as OAGIS or IFX for banking, and some are not.

Whether your organization has an EDM or not, there is a different approach that allows for more harmony across the organization, which ultimately leads to greater adoption. Allow the integration teams to participate and influence the data model over time as they implement real-world projects. In a nutshell, evolve your data model from the inside out. In order to successfully employ this middle-out approach, you’ll need both good process and good tools.

Into the minds of an enterprise architect and an integration analyst

I recently interviewed one of our senior solution engineers, Walter Lindsay, who has over 20 years of data integration expertise, about this challenge:

“Enterprise architects, naturally, want project teams to think like architects. Architects want integration project teams to do extra steps like defining services based on an enterprise model based on a vocabulary. Integration teams are often happy to build services. But they want to build services that are like the back-end data they are exposing, which typically isn’t much like the enterprise model. Integration teams want to simplify the problem their project is addressing, which is likely to be hard as it is. Integration teams want to think like integration teams, not like enterprise architects. As a result, the teams building services too often ignore the needs of the enterprise architecture, and the organization gets farther away from a unified data architecture.”

To solve this problem, architects need to make the enterprise model easy for the integration team to use. Integration teams need autonomy and freedom to extend the model as needed, and flexibility to create usable service schemas out of the model. Architects then need an easy way to update the enterprise model so it is easier to use in future projects.
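One way this feedback loop could look in practice is sketched below. It is only an illustration under stated assumptions: the `CanonicalCustomer` type, its `extensions` field, and the `promotion_candidates` helper are hypothetical names, not part of any particular EDM tool. The idea is that integration teams record attributes the model lacks in an explicit extension area instead of hiding them in unrelated fields, and architects can then see which extensions are used often enough to be promoted into the model.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class CanonicalCustomer:
    """Hypothetical canonical entity with an explicit, named extension area."""
    customer_id: str
    name: str
    # Attributes the enterprise model does not define yet go here,
    # under their real names, instead of into an unused field.
    extensions: dict[str, str] = field(default_factory=dict)


def extension_usage(records: list[CanonicalCustomer]) -> Counter:
    """Count how many records use each extension attribute."""
    usage: Counter = Counter()
    for record in records:
        usage.update(record.extensions.keys())
    return usage


def promotion_candidates(records: list[CanonicalCustomer], min_uses: int = 2) -> list[str]:
    """List extension attributes used at least `min_uses` times (threshold is arbitrary)."""
    counts = extension_usage(records)
    return sorted(name for name, uses in counts.items() if uses >= min_uses)


records = [
    CanonicalCustomer("c1", "Ann", {"shoe_size": "9"}),
    CanonicalCustomer("c2", "Bob", {"shoe_size": "11", "loyalty_tier": "gold"}),
    CanonicalCustomer("c3", "Cy"),
]
print(promotion_candidates(records))
```

Here `shoe_size` appears in two records and would be flagged for the architects to consider adding to the model, while `loyalty_tier` stays a local extension for now.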

While coercion may be needed at the start to get project teams to want to use new tools and practices, this approach incents the enterprise architects to serve the integration teams so that future projects are consistently aligned with the vocabulary and model the architects need.

Giving the integration teams this kind of feedback into the data model allows the data model to evolve over time. There are several benefits to this approach:

- Data models can be implemented with lower up-front cost and adopted faster.

- Data models can evolve to more accurately match the IT and business landscape of the organization.

- Various teams can stay in sync and collaborate on evolving the EDM, reducing the fragmentation that can otherwise occur.

To put it simply: teams stop rejecting a data model when they have input into it while working on real-world deliverables. This is a dramatic change in mindset for an organization. For companies that have put a lot of time, money and effort into implementing and maintaining their EDM, it requires a new discipline to make it work. It also requires intelligent transformation and integration tools that are EDM aware. In the end, if it is done well, the quote can be changed to:

“I accept your data model and enhance it with my own.”

Robert Fox is the vice president of application development for Liaison Technologies and the architect for several of Liaison’s data integration solutions. Liaison Technologies is a global provider of cloud-based integration and data management services and solutions. Rob was an original contributor to the ebXML 1.0 specification, is the former chair of marketing and business development for ASC ANSI X12 and a co-founder and co-chair of the Connectivity Caucus.