As companies begin to decouple data from applications to enable richer services both inside and outside their organizations, new challenges arise that can either accelerate or slow the adoption of data sharing through data-as-a-service offerings.

In its recent white paper “Accenture Technology Vision 2012,” Accenture makes six predictions about game-changing technology trends. On the subject of the Industrial Data Services trend, it says that the “freedom to share data will make data more valuable – but only if it’s managed differently.”

Accenture identifies eight dimensions of the value of data to an organization, all of them difficult to measure in actual dollars:

- Utility

- Uniqueness or exclusivity

- Ease of production

- Usage and sharing restrictions

- Usability and integration

- Trustworthiness

- Support

- Consumer demand

The difficulty in shifting to an architecture that enables data as a service (DaaS) lies in changing a philosophy that CIOs have held for years. Who owns data? Is it the application, the application group, the organization, or …? Accenture’s eight dimensions help frame the value of the data in question. However, to truly understand the value of DaaS and the shift in philosophy from data ownership to data stewardship, the first step is to define the data value chain.

Data value chain

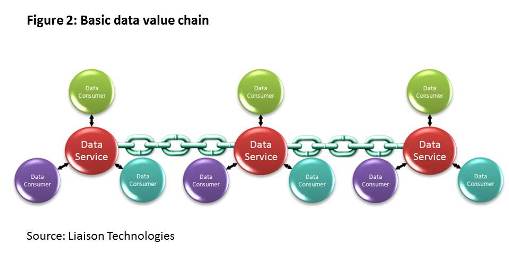

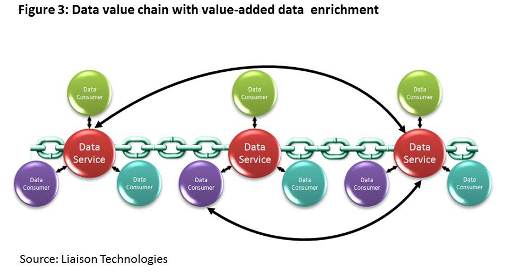

The real value of data as a service can be measured by the length of the data value chain. When a company decouples data and makes it available for consumption as a service, it adds value to the data value chain, and the next “hop” can use that service to add more. The challenge arises when consumers use the service to access the federated data and then build their own isolated data pools, or silos. Currently, most data services follow a one-directional hub-and-spoke model.

In this model, data as a service is not truly leveraged as a data value chain. Consider Accenture’s example of a company that pulls data from its customer relationship management (CRM) system to study loyalty trends; in the process, the marketing group creates its own isolated data pool that no one else can use. Worse still, the originating source may not refresh that data pool regularly. This model (Fig. 1) creates a break in the data value chain that causes the data to quickly become stale and inaccurate.

By contrast, a healthy data value chain may have many consumers that do not extend the chain, but those consumers do not store snapshots of the data they use. Instead, data flows freely in and out of the core data sets as needed. The goal is to lay out the services so that they produce a healthy ecosystem of data services along the value chain.

Figure 2 shows the value of interweaving DaaS providers to increase the value of data and promote data reuse. The synergy comes when two or more services feed value to each other, which lets the ecosystem grow the data value chain through enrichment between data consumers and data producers.
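To make the difference between a link and a break in the chain concrete, here is a minimal Python sketch. It assumes a simple key-value lookup API; the class names (`SourceService`, `EnrichmentService`, `SnapshotConsumer`) and the loyalty and shipping rules are hypothetical illustrations, not part of any product or of Accenture’s paper. Enrichment services read from their upstream on every request, so each hop sees changes at the source. The snapshot consumer copies the data once, like the marketing group’s isolated pool.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Protocol


class DataService(Protocol):
    """Anything that can serve a record by key (hypothetical minimal API)."""
    def get(self, key: str) -> dict: ...


class SourceService:
    """Hypothetical source of record, e.g. a CRM exposed as a data service."""

    def __init__(self, records: Dict[str, dict]):
        self.records = records

    def get(self, key: str) -> dict:
        # Hand out a copy so consumers cannot mutate the source of record.
        return dict(self.records[key])

    def update(self, key: str, **changes) -> None:
        self.records[key].update(changes)


@dataclass
class EnrichmentService:
    """A link in the chain: reads upstream on every call and adds fields."""
    upstream: DataService
    enrich: Callable[[dict], dict]

    def get(self, key: str) -> dict:
        record = self.upstream.get(key)  # fetched live, never cached
        record.update(self.enrich(record))
        return record


class SnapshotConsumer:
    """A break in the chain: copies the data once into an isolated pool."""

    def __init__(self, upstream: DataService, keys):
        self.pool = {k: upstream.get(k) for k in keys}  # never refreshed

    def get(self, key: str) -> dict:
        return self.pool[key]


def loyalty_tier(record: dict) -> dict:
    # Hypothetical rule: ten or more purchases earns the "gold" tier.
    return {"tier": "gold" if record.get("purchases", 0) >= 10 else "standard"}


def shipping_offer(record: dict) -> dict:
    # Hypothetical second hop that builds on the tier added upstream.
    return {"offer": "free shipping" if record["tier"] == "gold" else None}


crm = SourceService({"c1": {"name": "Ada", "purchases": 3}})
marketing_silo = SnapshotConsumer(crm, ["c1"])       # isolated data pool
loyalty = EnrichmentService(crm, loyalty_tier)       # first hop
offers = EnrichmentService(loyalty, shipping_offer)  # next hop

crm.update("c1", purchases=12)  # the source of record changes

print(offers.get("c1"))
# {'name': 'Ada', 'purchases': 12, 'tier': 'gold', 'offer': 'free shipping'}
print(marketing_silo.get("c1"))
# {'name': 'Ada', 'purchases': 3}
```

Because each hop delegates to its upstream instead of keeping its own copy, the CRM update flows through both hops, while the isolated pool silently keeps the old purchase count. A real chain would likely add caching with explicit refresh or change notifications, which this sketch deliberately omits.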

The challenge facing organizations is twofold: 1) to show the value in decoupling data from applications and 2) to create an open service-oriented architecture that allows the data to be shared both inside and outside of the organization.

Use case examples

Let’s take a look at a couple of real examples of how a federated data-as-a-service model can produce benefits not possible with traditional data management approaches.

Use Case 1: Secondary use of personal health data

The sharing of health data through data services illustrates how setting data free can produce tangible benefits. Decoupling and aggregating patient record data benefits medical facilities and practitioners by allowing them to:

- Monitor the health of the population

- Identify populations at high risk of disease

- Determine the effectiveness of treatment

- Quantify prognosis and survival

- Assess the usefulness of preventive strategies, diagnostic tests and screening programs

- Inform health policy through studies on cost-effectiveness

- Support administrative functions

- Monitor the adequacy of care

Without decoupling and sharing the data via data services, these health benefits are extremely difficult, if not impossible, to achieve.

Use Case 2: GS1 Global Data Synchronization Network (GDSN) benefits to suppliers

GS1’s Global Data Synchronization Network allows suppliers, customers, manufacturers and producers to publish and synchronize product data into global data pools. Accenture and Capgemini have published reports describing the business benefits of GDSN based on extensive research with major associations, suppliers and retailers including Royal Ahold, The Coca-Cola Company, General Mills, The Hershey Company, The J.M. Smucker Company, Johnson & Johnson, Nestlé, PepsiCo, Procter & Gamble, Sara Lee, The Gillette Company, Unilever and Wegmans.

The results clearly show that synchronizing accurate and properly classified data brings many business benefits to suppliers. For example, time-to-shelf was reduced by an average of two to six weeks, order and item administration improved by 67 percent, and item data issues created during the sales process were reduced by an average of 25 to 55 percent (source: http://www.gs1.org/gdsn/ds/suppliers).

The business benefits include:

- Better category and promotion management

- Easier administrative data handling

- Smoother logistics

- Increased operational efficiencies

- Increased customer satisfaction

- Improved bottom line

Challenges

1. Evaluate data silos – level of effort (LOE) and cost barriers

Not every application’s data can be decoupled and shared. An organization has to survey its applications and data sources and identify those that can be decoupled and those that can’t. The level of effort required to decouple data must also be weighed against the estimated value of doing so.

In many cases, it is also worth evaluating whether to retire the data store instead. For example, a company that loads and statically stores tax tables takes on a painful maintenance burden, and stale data can prove costly. Even if that data can be decoupled and shared across the multiple systems that would benefit from access to it, the company may be better served by abandoning the data store altogether and subscribing to a data service that provides tax table information in real time.

2. Privacy concerns

When an organization decides to share data with applications and services beyond its current application or departmental walls, whether with other departments in the organization or with external organizations, privacy becomes a major concern.

In our healthcare example, sharing patient data for secondary use is governed in the United States by the Health Insurance Portability and Accountability Act (HIPAA) Privacy Rule, which the Department of Health and Human Services issued to protect the privacy of patient health information.

3. Security concerns

Security is another general concern when a company decides to enable data services. Who can access the data, and how? Limiting access implies access control, which must be managed. If the data is exchanged, especially between networks, how will it be kept secure?

In light of recent PCI DSS Level 2 compliance breaches involving credit card data, the risk of unauthorized access to data in motion is difficult to contain without a solid security plan that protects the data and controls access to it.
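The access-control question (who can access which data, and how) can be sketched as a deny-by-default, role-based policy check. This is a minimal Python illustration, not a security design: the dataset names, roles and the `POLICY`, `Principal` and `is_allowed` names are all hypothetical. Protecting data in transit between networks (for example with TLS) is a separate concern that the sketch does not cover.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet

# Hypothetical access policy: dataset -> action -> roles allowed to perform it.
POLICY: Dict[str, Dict[str, FrozenSet[str]]] = {
    "customer_profiles": {
        "read": frozenset({"marketing", "support"}),
        "write": frozenset({"crm_admin"}),
    },
    "tax_tables": {
        "read": frozenset({"finance", "billing"}),
        "write": frozenset(),  # no internal role may modify subscribed data
    },
}


@dataclass(frozen=True)
class Principal:
    name: str
    roles: FrozenSet[str]


def is_allowed(principal: Principal, dataset: str, action: str) -> bool:
    """Deny by default: unknown datasets or actions grant no access."""
    allowed_roles = POLICY.get(dataset, {}).get(action, frozenset())
    return bool(principal.roles & allowed_roles)


analyst = Principal("ana", frozenset({"marketing"}))
print(is_allowed(analyst, "customer_profiles", "read"))   # True
print(is_allowed(analyst, "customer_profiles", "write"))  # False
print(is_allowed(analyst, "payroll", "read"))             # False
```

Keeping the policy as data rather than scattering checks through application code is one way to make access control something that can be reviewed and managed as sharing expands.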

4. Falling short of true value

In addition to the cost of making data available as a service, companies also need to evaluate the value of doing it by answering these questions:

- Will any other service or application benefit from the availability of the data in question?

- Does the decoupling of the data allow for an upgrade and retirement path for a legacy application?

There are plenty of cases where the value simply isn’t there, so the utility of free data needs to be carefully weighed.

5. Data governance

Publishing and subscribing to data services require data governance to ensure the accuracy of the information being shared. We must have confidence that the data we receive and the data we submit is validated and harmonized.

For example, we trust that a page on Wikipedia is accurate because a process is in place to verify the information and correct errors.

Like Wikipedia, the data value chain has to have data governance built into it to ensure the data available for use is current, complete and accurate. Therefore, individuals and organizations that participate in the data value chain as non-terminating links have the responsibility to be data stewards. A data steward maintains data quality by ensuring that data:

- Has a clear and unambiguous data element definition

- Does not conflict with other data elements in the metadata registry (remove duplicates, overlaps, etc.)

- Has clear enumerated value definitions

- Is still being used (remove unused data elements)

- Is being used consistently in various computer systems

- Has adequate documentation on appropriate usage and notes

- Has a documented origin and source of authority for each metadata element

Although these practices are hard to enforce, the goal is to ensure that no single point in the chain is the sole authority on correctness; rather, each link plays a role in keeping the data accurate.
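Several items on the stewardship checklist can be checked mechanically, which helps each link in the chain play its part. The following Python sketch assumes a hypothetical in-memory metadata registry; `DataElement`, `steward_report` and the sample elements are illustrative names, not a standard API. It approximates "does not conflict" with a case-insensitive duplicate-name check and "is still being used" with whether any system declares the element. Detecting semantic overlaps would still need human review.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class DataElement:
    name: str
    definition: str = ""
    enum_values: Dict[str, str] = field(default_factory=dict)      # value -> meaning
    types_by_system: Dict[str, str] = field(default_factory=dict)  # system -> declared type
    usage_notes: str = ""
    origin: Optional[str] = None  # source of authority


def steward_report(registry: List[DataElement]) -> Dict[str, List[str]]:
    """Return a map of element name -> list of stewardship problems found."""
    issues: Dict[str, List[str]] = {}

    def flag(name: str, problem: str) -> None:
        issues.setdefault(name, []).append(problem)

    name_counts = Counter(e.name.lower() for e in registry)
    for e in registry:
        if not e.definition.strip():
            flag(e.name, "missing definition")
        if name_counts[e.name.lower()] > 1:
            flag(e.name, "duplicate element name")
        undefined = [v for v, meaning in e.enum_values.items() if not meaning.strip()]
        if undefined:
            flag(e.name, f"undefined enumerated values: {sorted(undefined)}")
        if not e.types_by_system:
            flag(e.name, "not used by any system")
        elif len(set(e.types_by_system.values())) > 1:
            flag(e.name, "inconsistent types across systems")
        if not e.usage_notes.strip():
            flag(e.name, "no usage documentation")
        if not e.origin:
            flag(e.name, "no documented origin")
    return issues


registry = [
    DataElement(
        name="customer_status",
        definition="Current lifecycle state of a customer account",
        enum_values={"A": "Active", "I": ""},
        types_by_system={"crm": "char(1)", "billing": "varchar(10)"},
        usage_notes="Use for segmentation only",
        origin="CRM",
    ),
    DataElement(name="Customer_Status"),  # near-duplicate with no metadata
]

report = steward_report(registry)
for name, problems in report.items():
    print(name, problems)
```

A report like this does not make any single link the authority on correctness; it only gives each steward a repeatable way to spot problems before passing data along.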

Summary

Before a true revolution in data as a service can occur, organizations must be convinced of its value. While the value of an IT change is traditionally measured in ROI, the benefits of decoupling data from applications for sharing across the extended enterprise are far-reaching and can’t always be quantified in monetary savings or gains. In the “Accenture Technology Vision 2012” white paper, Accenture says it best:

“Put simply, increased sharing of data through data services calls for a radical rethinking of how IT should handle data management. Essentially, data management shifts from being an IT capability buried within application support to a collaborative effort that enables data to be used far beyond the applications that created it.”

Is your organization a link or a break in the data value chain?

Robert Fox is the Senior Director of Software Development at Liaison Technologies, a global provider of secure cloud-based integration and data management services and solutions based in Atlanta. An original contributor to the ebXML 1.0 specification, the former Chair of Marketing and Business Development for ASC ANSI X12, and a co-founder and co-chair of the Connectivity Caucus, he can be reached at rfox@liaison.com.