The container trend that took off in late 2014 will remain a hot topic in the DevOps universe throughout 2015. This article provides an overview of the current state of container solutions and providers, as well as the major benefits that container technology offers for business and software development.

Brief historical overview

Over the last 30 years, software product and service delivery has evolved significantly. Five key stages can be identified:

- Got everything: Source code and precompiled binaries for an officially supported OS platform (for both commercial and open source software). During the 1980s, source code was the only way to support compatibility across the zoo of OS platforms (more than 50 major OSes and platforms were on the market). As a result, IT departments spent a lot of time supporting software solutions.

- First step: Packages and binaries. In the early 1990s, the OS platform market consolidated to only five or six major players, and the cost of software development grew exponentially. As a result, software vendors switched to distributing precompiled binaries and packages with protection and encryption.

- Heavy artillery: Virtual machine golden images. In the early 2000s, monolithic software became so complicated and dependent on so many external components that supporting it became extremely difficult for IT departments, so software began to be delivered as preconfigured virtual machine images.

- Back to the roots: Source code, packages and automated provisioning. In the era of cloud solutions (AWS, OpenStack, CloudStack), as scripting languages became more and more popular, the industry returned to its roots. The most popular method became OS-specific packages (MSI, RPM, DEB) combined with automated deployment and configuration through dedicated frameworks (Puppet, Chef). However, this approach required significant investment in developing deployment automation.

- Mixed approach: Independent containers and configuration through service discovery. This is where we are today.

Container technology

If you are already familiar with OpenStack technology, you can think of Docker Engine as a simplified version of it.

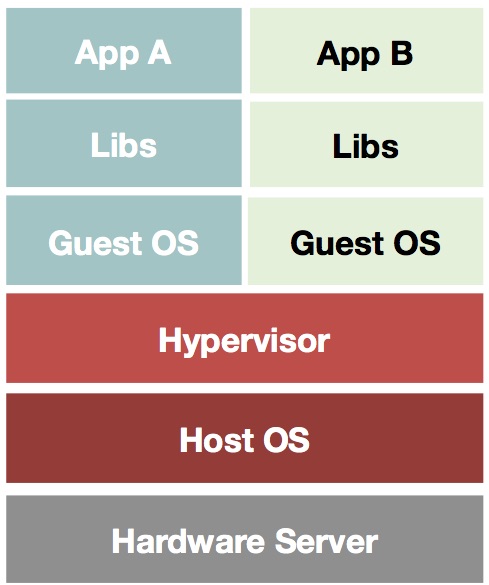

Docker is a container virtualization technology that offers a more lightweight approach to application deployment than most organizations currently use. With a traditional virtualization hypervisor such as VMware vSphere, Microsoft Hyper-V or the open source Xen and KVM technologies, each virtual machine (VM) needs its own guest operating system and virtualized hardware. In contrast, Docker isolates applications inside containers that all run on a single host OS.

Figure 1: Classical virtualization – schema

Each virtualized application includes not only the application and the necessary binaries and libraries but also an entire guest operating system.

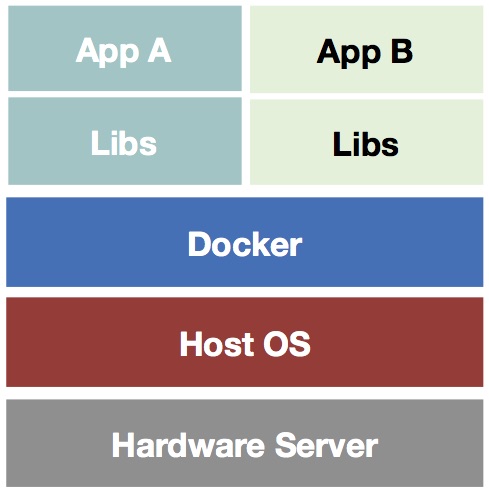

Figure 2: Docker containers – schema

A Docker Engine container includes only the application and its dependencies. It runs as an isolated process in user space on the host operating system and shares the host's kernel with other containers.

The idea of containers is nothing new. Sun implemented containers and zones technology in the Solaris OS more than 10 years ago. The technology has been proven in enterprise use and is now available on any Linux-based OS.

And it’s going mainstream. Microsoft Corp. and Docker Inc. announced a strategic partnership to provide Docker Engine with support for new container technologies that will be delivered in a future release of Windows Server.

In the near future, developers and organizations that want to create container applications with Docker will be able to use either Windows Server or Linux.

Container solutions and providers: overview and comparison

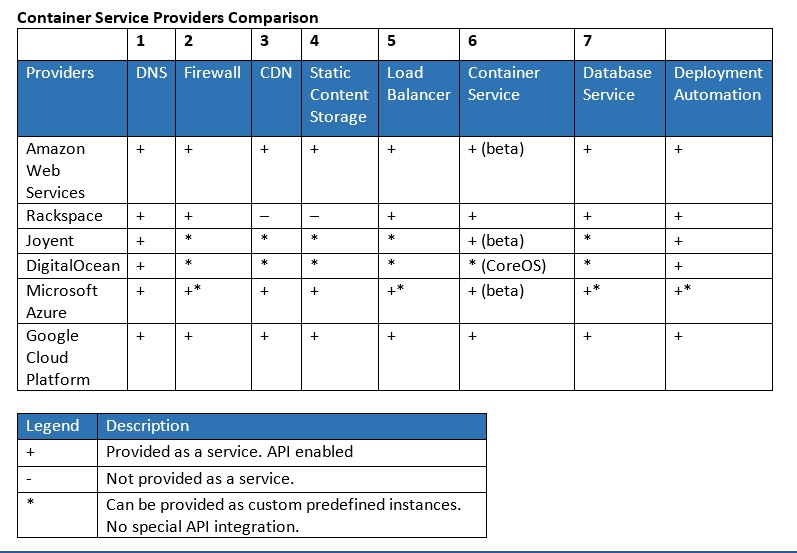

Let’s look at the major providers that offer containers as a platform for hosting typical Web applications.

A typical B2C or B2B Web application, whether it is built on .NET, Java or any other technology stack, relies on at least eight major infrastructure components:

- DNS and Discovery service

- Firewall and security

- Content Delivery Network (CDN)

- Static Content and Resource service (for images, audio and video, JS, static pages, etc.)

- Load Balancer service

- Computation/container services for the Web front end, APIs (for mobile applications, etc.) and application services

- Database service

- Deployment automation service

Figure 3: Generic Web applications schema

You can build these services from the ground up using open source or proprietary software, or reuse services provided by cloud platforms or container frameworks.
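Several of these components cooperate at runtime; for example, the discovery service and the load balancer together decide which container instance receives a request. The Python sketch below illustrates that interaction under simple assumptions: an in-memory registry where each container registers its address and must renew it (a heartbeat) before a TTL expires, plus round-robin selection over the live instances. The `ServiceRegistry` class, its methods and the addresses are hypothetical and do not reflect the API of any real discovery product.

```python
import itertools
import time
from dataclasses import dataclass, field
from typing import Dict, Iterator, List, Optional


@dataclass
class ServiceRegistry:
    """Toy in-memory discovery service (hypothetical, for illustration only).

    Each container instance registers its address and must renew the
    registration (a heartbeat) before ttl_seconds elapse, or it is dropped.
    """
    ttl_seconds: float = 10.0
    # service name -> {instance address: expiry timestamp}
    _entries: Dict[str, Dict[str, float]] = field(default_factory=dict, init=False, repr=False)
    # service name -> round-robin counter
    _counters: Dict[str, Iterator[int]] = field(default_factory=dict, init=False, repr=False)

    def register(self, service: str, address: str, now: Optional[float] = None) -> None:
        """Register a new instance or renew an existing one (heartbeat)."""
        now = time.monotonic() if now is None else now
        self._entries.setdefault(service, {})[address] = now + self.ttl_seconds

    def healthy(self, service: str, now: Optional[float] = None) -> List[str]:
        """Drop expired registrations and return the live addresses, sorted."""
        now = time.monotonic() if now is None else now
        instances = self._entries.get(service, {})
        for address in [a for a, expiry in instances.items() if expiry <= now]:
            del instances[address]
        return sorted(instances)

    def pick(self, service: str, now: Optional[float] = None) -> str:
        """Round-robin load balancing across the live instances of a service."""
        candidates = self.healthy(service, now)
        if not candidates:
            raise LookupError(f"no healthy instances of {service!r}")
        counter = self._counters.setdefault(service, itertools.count())
        return candidates[next(counter) % len(candidates)]


if __name__ == "__main__":
    registry = ServiceRegistry(ttl_seconds=10)
    registry.register("api", "10.0.0.5:8080", now=0)
    registry.register("api", "10.0.0.6:8080", now=0)
    print(registry.pick("api", now=1))  # 10.0.0.5:8080
    print(registry.pick("api", now=2))  # 10.0.0.6:8080
    registry.register("api", "10.0.0.5:8080", now=8)  # only .5 sends a heartbeat
    print(registry.healthy("api", now=12))  # ['10.0.0.5:8080']
```

Passing `now` explicitly keeps the demo deterministic; in a running system the default monotonic clock would be used. A container that stops sending heartbeats simply disappears from the pool, which is the property that lets containers come and go without manual reconfiguration.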

Table 1

Container services are a new business for cloud providers, and most of them are not yet ready for production-grade use. Even though Google Cloud Platform and Amazon Web Services (AWS) provide better container services than all the others listed in the table above, they still lack full integration with the Docker API and cannot manage network connections and security between containers on the same host machine.

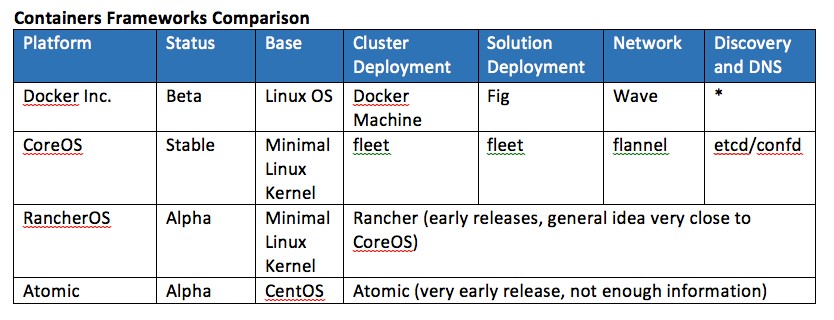

Docker Engine frameworks

The situation is much better when it comes to Docker Engine frameworks for companies building their own Docker clusters. The open source community has already provided fully implemented and well-tested frameworks based on CoreOS.

CoreOS includes all the major required components, from a small and very stable host OS with Docker Engine to deployment automation and a simple but powerful scheduler.
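A cluster scheduler's core job is deciding which host runs each container, given the resources each host has left. The Python sketch below shows that idea with a plain first-fit heuristic. It is an illustration under simplified assumptions (only CPU and memory are considered, with no placement constraints or rescheduling) and is not the algorithm used by the CoreOS scheduler, Mesos or Kubernetes. The `Host`, `ContainerSpec` and `schedule_first_fit` names and all resource figures are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Host:
    name: str
    free_cpu: float      # CPU cores still available
    free_mem_mb: int     # memory still available, in MB
    containers: List[str] = field(default_factory=list)


@dataclass
class ContainerSpec:
    name: str
    cpu: float           # requested CPU cores
    mem_mb: int          # requested memory, in MB


def schedule_first_fit(specs: List[ContainerSpec], hosts: List[Host]) -> Dict[str, Optional[str]]:
    """Place each container on the first host with enough free CPU and memory.

    Returns a mapping of container name -> host name (None if no host fits).
    Hosts are updated in place to reflect the resources consumed.
    """
    placement: Dict[str, Optional[str]] = {}
    for spec in specs:
        placement[spec.name] = None
        for host in hosts:
            if host.free_cpu >= spec.cpu and host.free_mem_mb >= spec.mem_mb:
                host.free_cpu -= spec.cpu
                host.free_mem_mb -= spec.mem_mb
                host.containers.append(spec.name)
                placement[spec.name] = host.name
                break
    return placement


if __name__ == "__main__":
    hosts = [Host("node-1", 2.0, 4096), Host("node-2", 4.0, 8192)]
    specs = [
        ContainerSpec("web", 1.5, 1024),
        ContainerSpec("api", 1.0, 2048),
        ContainerSpec("db", 2.0, 6144),
        ContainerSpec("batch", 4.0, 1024),
    ]
    print(schedule_first_fit(specs, hosts))
    # {'web': 'node-1', 'api': 'node-2', 'db': 'node-2', 'batch': None}
```

First fit is about the simplest placement policy there is; production schedulers usually weigh additional factors such as affinity rules, priorities and spreading replicas across hosts.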

In fact, with CoreOS and Mesos or Kubernetes, any experienced engineer can build a better solution than those provided by Amazon or Azure, and one of almost as high quality as Google Cloud Platform.

This kind of solution would be best for companies that:

- Cannot use public clouds for security reasons

- Are “old style” data centers

About four years ago, OpenStack promised to provide tools for building private clouds. But the complexity of the OpenStack architecture and implementation has reached such a level that only a few technical companies in the world can afford to build such solutions.

With CoreOS and Mesos or Kubernetes, most SMBs and enterprises can afford to run their own private cloud without having to rely on unstable public clouds.

Docker Engine frameworks should be especially interesting for “old style” data centers. With solutions like Mesosphere (DCOS), data centers can reuse two- to four-year-old (but still good enough) hardware to provide public or private container hosting services for SMB clients and significantly improve their return on investment.

Table 2

Conclusion

If your company is interested in Docker Engine containers, I highly recommend that you start testing and using container hosting providers for development and testing purposes. Google Cloud Platform is ready for production solutions. Based on current progress, AWS and Microsoft Azure look likely to be ready for hosting large production services in the next 6 to 12 months.

Docker container technology is ready for real-world use, and companies such as Google, Airbnb and Mailgun have already adopted containers for large production applications. If you are still worried about the security and maturity of this technology, I definitely recommend that you start using containers in the development and testing stages of the life cycle.

Cisco, IBM, PayPal and Rackspace have already used Docker Engine to greatly improve scalability and time to market for their own services and products. Do not hesitate to try it!

Myroslav Rys is an infrastructure and operations management expert at SoftServe, Inc. He has more than 15 years of experience in software development. Myroslav is also an organizer of the Lviv DevOps Meetup.